Applications Of Vector Spaces

When an object is made up of multiple components it is often useful to represent the object as a vector, with one entry per component. The examples discussed in this section involve molecules, which are made up of atoms, and text documents, which are made up of words. In some cases equations involving the objects give rise to vector equations. In other instances there are reasons to perform operations on the vectors using matrix algebra.

Affine And Projective Spaces


Roughly, affine spaces are vector spaces whose origins are not specified. More precisely, an affine space is a set with a free transitive vector space action. In particular, a vector space is an affine space over itself, by the map

- $V \times V \to V$, $(a, v) \mapsto a + v$.

If W is a vector space, then an affine subspace is a subset of W obtained by translating a linear subspace V by a fixed vector x ∈ W; this space is denoted by x + V and consists of all vectors of the form x + v for v ∈ V. An important example is the space of solutions of a system of inhomogeneous linear equations

- Ax = b

generalizing the homogeneous case, which is recovered by setting b = 0 in this equation. The space of solutions is the affine subspace x + V, where x is a particular solution of the equation and V is the space of solutions of the homogeneous equation Ax = 0 (the nullspace of A).
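As a minimal sketch of this structure, the following Python snippet takes a small made-up system (the matrix `A`, the right-hand side `b`, and both vectors are illustrative assumptions, not taken from the text) and checks that every point of the form "particular solution plus a homogeneous solution" again solves Ax = b.

```python
from fractions import Fraction

def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Hypothetical system: the single equation x1 + 2*x2 = 4 in two unknowns.
A = [[Fraction(1), Fraction(2)]]
b = [Fraction(4)]

x_particular = [Fraction(4), Fraction(0)]     # one solution of Ax = b
v_homogeneous = [Fraction(-2), Fraction(1)]   # spans the solutions of Ax = 0

def affine_point(t):
    """Return x_particular + t * v_homogeneous, a point of the affine subspace."""
    return [p + t * v for p, v in zip(x_particular, v_homogeneous)]

# Every sampled point of x + V solves the inhomogeneous system.
solutions = [affine_point(Fraction(t)) for t in range(-3, 4)]
all_solve = all(mat_vec(A, x) == b for x in solutions)
print(all_solve)
```

Exact rational arithmetic via `fractions.Fraction` is used here so that the equality checks are not affected by floating-point rounding.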

The set of one-dimensional subspaces of a fixed finite-dimensional vector space V is known as projective space; it may be used to formalize the idea of parallel lines intersecting at infinity. Grassmannians and flag manifolds generalize this by parametrizing linear subspaces of fixed dimension k and flags of subspaces, respectively.

Example Of Vector Space

In almost all the literature that I have seen, one of the examples of a vector space is as follows:

Set of all real-valued functions $f$ defined on the real line

What confuses me here is that the words “linear functions” should be used instead of just “functions”, because I think we also have non-linear functions and they do not follow the rules of addition and scalar multiplication:

$(f+g)(x) = f(x) + g(x)$ and $(kf)(x) = k f(x)$

Thus, non-linear functions cannot make a vector space.

Am I right or wrong?

You’re confusing adding the functions with adding their inputs. A linear function would have the property $f(x+y) = f(x) + f(y)$. However, for a vector space, you add the functions. For example, let $f(x) = x^2$ and $g(x) = x^3$; then $(f+g)(x) = f(x) + g(x) = x^2 + x^3$.

- Oh, I get it: when we consider the set of all functions, the addition and scalar multiplication rules will always be satisfied by f and g. Thanks.

The functions do not need to be linear. You are confusing this with the fact that $f(x+y) = f(x) + f(y)$ only holds for linear functions, but this is not required here. What is meant is that the sum of two functions $f$ and $g$ is the function $f+g$, which is given by $(f+g)(x) = f(x) + g(x)$.

You can have non-linear functions in the vector space. Why do you say that $(f+g)(x) = f(x) + g(x)$ and $(kf)(x) = k f(x)$ are not true for non-linear functions? This is how one defines addition of the vectors and multiplication with scalars. One can for instance have the vector space of continuous functions on some space.

Maybe you are confusing it with $f(kx) = k f(x)$ and $f(x+y) = f(x) + f(y)$?
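The distinction made in these answers can be illustrated with a short Python sketch. The helper names `add` and `scale` are hypothetical, chosen only for this example; they implement the pointwise operations that make the set of all real-valued functions a vector space.

```python
def add(f, g):
    """Pointwise sum: (f + g)(x) = f(x) + g(x)."""
    return lambda x: f(x) + g(x)

def scale(k, f):
    """Pointwise scalar multiple: (k f)(x) = k * f(x)."""
    return lambda x: k * f(x)

f = lambda x: x ** 2   # not a linear function
g = lambda x: x ** 3   # not a linear function

h = add(f, g)          # h(x) = x^2 + x^3
print(h(2))            # 4 + 8 = 12
print(scale(3, f)(2))  # 3 * 4 = 12

# Linearity of f itself is a different statement, and it fails here:
print(f(1 + 2) == f(1) + f(2))  # 9 versus 5, so False
```

Adding and scaling the functions always works, whether or not the individual functions are linear in their input.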


Preparation For Linear Algebra

A linear vector space consists of a set of vectors or functions and the standard operations of addition, subtraction, and scalar multiplication. In solving ordinary and partial differential equations, we assume the solution space to behave like an ordinary linear vector space. A primary concern is whether or not we have enough of the correct vectors needed to span the solution space completely. We now investigate these notions as they apply directly to two-dimensional vector spaces and differential equations.

We use the simple example of the very familiar two-dimensional Euclidean vector space $\mathbb{R}^2$; this is the familiar plane. The two standard vectors in the plane are traditionally denoted as i and j. The vector i is a unit vector along the x-axis, and the vector j is a unit vector along the y-axis. Any point in the plane can be reached by some linear combination, or superposition, of the two standard vectors i and j. We say the vectors span the space. The fact that only two vectors are needed to span the two-dimensional space $\mathbb{R}^2$ is not coincidental; three vectors would be redundant. One reason for this has to do with the fact that the two vectors i and j are linearly independent; that is, one cannot be written as a multiple of the other. The other reason has to do with the fact that in an n-dimensional Euclidean space, the minimum number of vectors needed to span the space is n.

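Two vectors $\mathbf{v}_1$ and $\mathbf{v}_2$ are said to be linearly independent if the only solution of $c_1 \mathbf{v}_1 + c_2 \mathbf{v}_2 = \mathbf{0}$ is that both $c_1$ and $c_2$ are zero. Otherwise, the vectors are said to be linearly dependent.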
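For two vectors in the plane, linear independence can be tested with a 2-by-2 determinant: the equation c1·u + c2·v = 0 has only the zero solution exactly when the determinant of the matrix with columns u and v is nonzero. The sketch below assumes integer or exact rational entries; the function names are illustrative.

```python
def det2(u, v):
    """Determinant of the 2x2 matrix whose columns are u and v."""
    return u[0] * v[1] - u[1] * v[0]

def independent(u, v):
    """True if the plane vectors u and v are linearly independent."""
    return det2(u, v) != 0

i = (1, 0)  # unit vector along the x-axis
j = (0, 1)  # unit vector along the y-axis

print(independent(i, j))            # the standard vectors are independent
print(independent((1, 2), (2, 4)))  # (2, 4) = 2 * (1, 2), so dependent
```

With floating-point entries an exact comparison with zero is fragile, so a tolerance would be needed in that setting.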

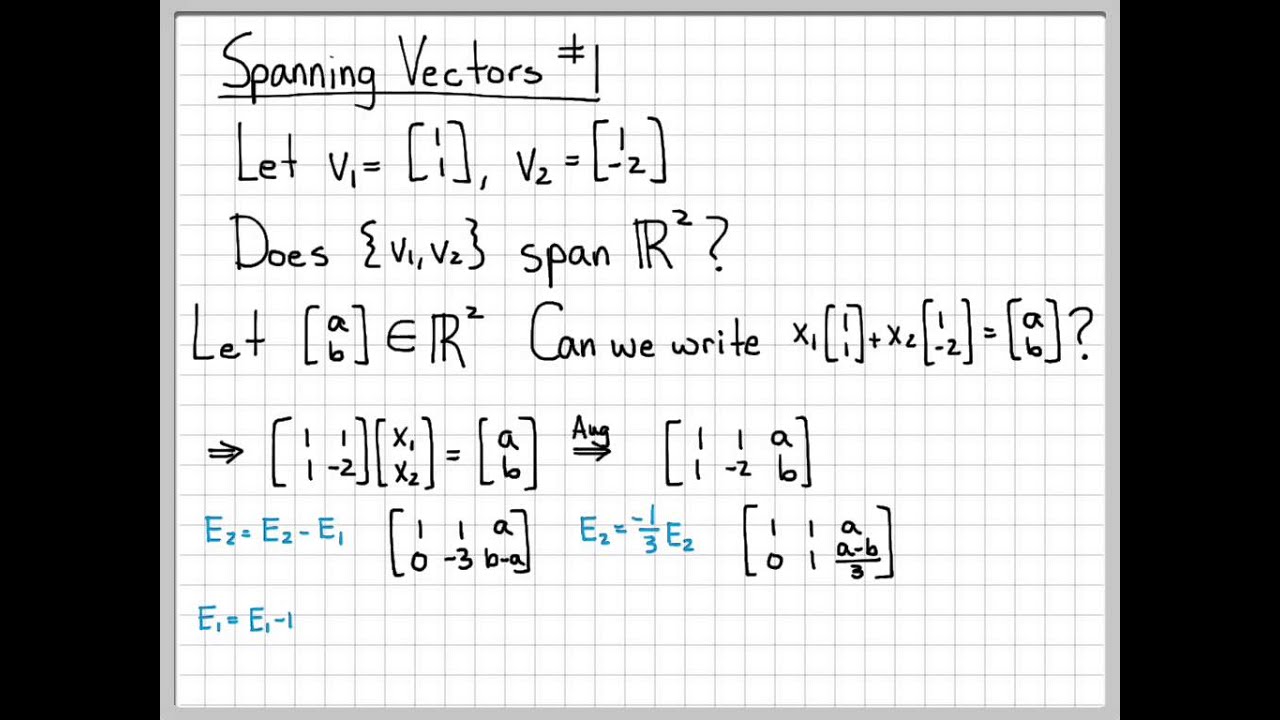

Know If A Vector Is In The Span

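How can we tell whether a vector is in the span of $v_1, \dots, v_n$?

Let $A = \left[\begin{array}{c|c|c} & & \\ v_1 & \cdots & v_n \\ & & \end{array}\right]$ be the matrix whose columns are $v_1, \dots, v_n$.

Using the linear-combinations interpretation of matrix-vector multiplication, every vector in $\operatorname{Span}\{v_1, \dots, v_n\}$ can be written as $Ax$ for some coefficient vector $x$.

Thus testing if $b$ is in $\operatorname{Span}\{v_1, \dots, v_n\}$ is equivalent to testing if the matrix equation $Ax = b$ has a solution.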
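One way to carry out this test by machine is to compare ranks: Ax = b has a solution exactly when A and the augmented matrix [A | b] have the same rank. The sketch below is an illustration of that approach with exact rational arithmetic; the function names `rank` and `in_span` and the sample vectors are assumptions made for this example.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    n_cols = len(m[0]) if m else 0
    for c in range(n_cols):
        # Find a row at or below position r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate column c from all rows below the pivot row.
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * p for a, p in zip(m[i], m[r])]
        r += 1
    return r

def in_span(vectors, b):
    """True if b is a linear combination of the given vectors (the columns of A)."""
    A = [list(row) for row in zip(*vectors)]          # vectors become columns
    augmented = [row + [bk] for row, bk in zip(A, b)]  # the matrix [A | b]
    return rank(A) == rank(augmented)

v1, v2 = (1, 0, 1), (0, 1, 1)
print(in_span([v1, v2], (2, 3, 5)))  # equals 2*v1 + 3*v2
print(in_span([v1, v2], (0, 0, 1)))  # lies outside the plane spanned by v1, v2
```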


Composition Of A Linear Transformations

The composition of linear transformations is similar to the composition of functions in calculus. If T is a linear transformation from U to V and S is a linear transformation from V to W, then the composition of S with T is the transformation or mapping ST defined by

(ST)(u) = S(T(u)), where u is in U

The next theorem follows directly from the definition:

Theorem 12: If T from U to V and S from V to W are linear transformations, then ST from U to W is a linear transformation.
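When the transformations are given by matrices, the composition ST corresponds to the matrix product. The following sketch checks (ST)(u) = S(T(u)) on one example; the matrices `T` and `S` and the vector `u` are made-up values for illustration.

```python
def mat_vec(A, x):
    """Apply the linear map with matrix A (list of rows) to the vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def mat_mul(S, T):
    """Matrix product S T, the matrix of the composition 'first T, then S'."""
    return [[sum(S[i][k] * T[k][j] for k in range(len(T)))
             for j in range(len(T[0]))]
            for i in range(len(S))]

T = [[1, 2], [0, 1], [3, 0]]   # maps U = R^2 to V = R^3
S = [[1, 0, 1], [2, 1, 0]]     # maps V = R^3 to W = R^2
u = [1, 1]

ST = mat_mul(S, T)
print(mat_vec(S, mat_vec(T, u)))  # apply T, then S
print(mat_vec(ST, u))             # apply the composed map directly
```

Both lines print the same vector, which is the content of the definition above in coordinates.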

A linear transformation T from V to W is invertible if there exists a linear transformation T’ from W to V such that T’T is the identity on V and TT’ is the identity on W.

Properties of inverses: If T is a linear transformation from V to W then:

1) If T is invertible then so is T’.

2) If T is invertible then its inverse is unique.

3) T is invertible if and only if ker(T) = {0} and im(T) = W.

Linear Algebra/definition And Examples Of Vector Spaces

- Remark 1.2

Because it involves two kinds of addition and two kinds of multiplication, that definition may seem confused. For instance, in condition 7, "$(r+s)\cdot\vec{v} = r\cdot\vec{v} + s\cdot\vec{v}$", the first "$+$" is the real number addition operator while the "$+$" to the right of the equals sign represents vector addition in the structure $V$. These expressions aren’t ambiguous because, e.g., $r$ and $s$ are real numbers, so "$r+s$" can only mean real number addition.

The best way to go through the examples below is to check all ten conditions in the definition. That check is written out at length in the first example. Use it as a model for the others. Especially important are the first condition, "$\vec{v}+\vec{w}$ is in $V$", and the sixth condition, "$r\cdot\vec{v}$ is in $V$". These are the closure conditions. They specify that the addition and scalar multiplication operations are always sensible: they are defined for every pair of vectors, and every scalar and vector, and the result of the operation is a member of the set.

- Example 1.3

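The set $\mathbb{R}^2$ is a vector space if the operations "$+$" and "$\cdot$" have their usual meaning:

- $\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} + \begin{pmatrix} y_1 \\ y_2 \end{pmatrix} = \begin{pmatrix} x_1 + y_1 \\ x_2 + y_2 \end{pmatrix} \qquad r\cdot\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} r x_1 \\ r x_2 \end{pmatrix}$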

We shall check all of the conditions.

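- Closure under addition: for any real numbers $x_1, x_2, y_1, y_2$, the sum $\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} + \begin{pmatrix} y_1 \\ y_2 \end{pmatrix} = \begin{pmatrix} x_1 + y_1 \\ x_2 + y_2 \end{pmatrix}$ is a column with two real entries, and so is in $\mathbb{R}^2$.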
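The vector space conditions for the plane can also be spot-checked numerically. The sketch below is not a proof, since it only tries a handful of sample vectors and scalars (all chosen arbitrarily for this illustration), but it shows what each condition asserts for the componentwise operations.

```python
from itertools import product

def add(v, w):
    """Componentwise addition in R^2."""
    return (v[0] + w[0], v[1] + w[1])

def scale(r, v):
    """Scalar multiplication in R^2."""
    return (r * v[0], r * v[1])

samples = [(0, 0), (1, -2), (3, 5)]   # arbitrary integer sample vectors
scalars = [-1, 0, 2]                  # arbitrary sample scalars
zero = (0, 0)

checks = {
    "commutative": all(add(v, w) == add(w, v)
                       for v, w in product(samples, repeat=2)),
    "associative": all(add(add(u, v), w) == add(u, add(v, w))
                       for u, v, w in product(samples, repeat=3)),
    "zero vector": all(add(v, zero) == v for v in samples),
    "additive inverse": all(add(v, scale(-1, v)) == zero for v in samples),
    "(r+s)v = rv + sv": all(scale(r + s, v) == add(scale(r, v), scale(s, v))
                            for r, s in product(scalars, repeat=2)
                            for v in samples),
    "r(v+w) = rv + rw": all(scale(r, add(v, w)) == add(scale(r, v), scale(r, w))
                            for r in scalars
                            for v, w in product(samples, repeat=2)),
    "(rs)v = r(sv)": all(scale(r * s, v) == scale(r, scale(s, v))
                         for r, s in product(scalars, repeat=2)
                         for v in samples),
    "1v = v": all(scale(1, v) == v for v in samples),
}

for name, ok in checks.items():
    print(name, ok)
```

The two closure conditions hold by construction here, because `add` and `scale` always return a pair of numbers.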


Linear Maps And Matrices

The relation between two vector spaces can be expressed by a linear map or linear transformation. These are functions that reflect the vector space structure, that is, they preserve sums and scalar multiplication:

- $f(\mathbf{v} + \mathbf{w}) = f(\mathbf{v}) + f(\mathbf{w})$ and $f(a \cdot \mathbf{v}) = a \cdot f(\mathbf{v})$ for all v and w in V, all a in F.

An isomorphism is a linear map f : V → W such that there exists an inverse map g : W → V, which is a map such that the two possible compositions f ∘ g : W → W and g ∘ f : V → V are identity maps. Equivalently, f is both one-to-one (injective) and onto (surjective). If there exists an isomorphism between V and W, the two spaces are said to be isomorphic; they are then essentially identical as vector spaces, since all identities holding in V are, via f, transported to similar ones in W, and vice versa via g.


For example, the “arrows in the plane” and “ordered pairs of numbers” vector spaces in the introduction are isomorphic: a planar arrow v departing at the origin of some coordinate system can be expressed as an ordered pair by considering the x- and y-components of the arrow, as shown in the image at the right. Conversely, given a pair (x, y), the arrow going by x to the right (or to the left, if x is negative) and y up (or down, if y is negative) gives back the arrow v.

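Matrices are a useful notion to encode linear maps. They are written as a rectangular array of scalars as in the image at the right. Any m-by-n matrix A gives rise to a linear map from $F^n$ to $F^m$, by the following

- $\mathbf{x} = (x_1, x_2, \dots, x_n) \mapsto \left(\sum_{j=1}^{n} a_{1j}x_j, \sum_{j=1}^{n} a_{2j}x_j, \dots, \sum_{j=1}^{n} a_{mj}x_j\right)$

or, using the matrix multiplication of the matrix A with the coordinate vector x:

- $\mathbf{x} \mapsto A\mathbf{x}$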

First Example: Arrows In The Plane

The first example of a vector space consists of arrows in a fixed plane, starting at one fixed point. This is used in physics to describe forces or velocities. Given any two such arrows, v and w, the parallelogram spanned by these two arrows contains one diagonal arrow that starts at the origin, too. This new arrow is called the sum of the two arrows, and is denoted v + w. In the special case of two arrows on the same line, their sum is the arrow on this line whose length is the sum or the difference of the lengths, depending on whether the arrows have the same direction. Another operation that can be done with arrows is scaling: given any positive real number a, the arrow that has the same direction as v, but is dilated or shrunk by multiplying its length by a, is called multiplication of v by a. It is denoted av. When a is negative, av is defined as the arrow pointing in the opposite direction instead.

The following shows a few examples: if a = 2, the resulting vector aw has the same direction as w, but is stretched to the double length of w. Equivalently, 2w is the sum w + w. Moreover, (−1)v = −v has the opposite direction and the same length as v.


Digression: Tensors And Tensor Fields

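Thus, in terms of the Cartesian components, the constitutive relations can be written as

$D_i = \sum_j \varepsilon_{ij} E_j, \qquad B_i = \sum_j \mu_{ij} H_j.$

As mentioned above, the electric permittivity and magnetic permeability tensors reduce, in the case of an isotropic medium, to scalars and the above relations simplify to

$\mathbf{D} = \varepsilon \mathbf{E}, \qquad \mathbf{B} = \mu \mathbf{H}.$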

It is not unusual for an optically anisotropic medium, with a permittivity tensor $\varepsilon_{ij}$, to be characterized by a scalar permeability $\mu$. In this book I use the SI system of units, in which the permittivity and permeability of free space are, respectively, $\varepsilon_0 = 8.85 \times 10^{-12}\ \mathrm{C^2\,N^{-1}\,m^{-2}}$ and $\mu_0 = 4\pi \times 10^{-7}\ \mathrm{N\,A^{-2}}$.

In general, for linear media with time-independent properties, the following situations may be encountered: isotropic homogeneous media, for which $\varepsilon$ and $\mu$ are scalar constants independent of r; isotropic inhomogeneous media, for which $\varepsilon$ and $\mu$ are scalars but vary from point to point; anisotropic homogeneous media, where $\varepsilon$ and $\mu$ are tensors independent of the position vector r; and anisotropic inhomogeneous media, in which $\varepsilon$ and $\mu$ are tensor fields. As mentioned above, in most situations relating to optics one can, for simplicity, assume $\mu$ to be a scalar constant, $\mu_0$.

One more constitutive equation holds for a conducting medium: $\mathbf{J} = \sigma \mathbf{E}$, where $\sigma$ is the conductivity.

Erdoğan S. Şuhubi, in, 2013

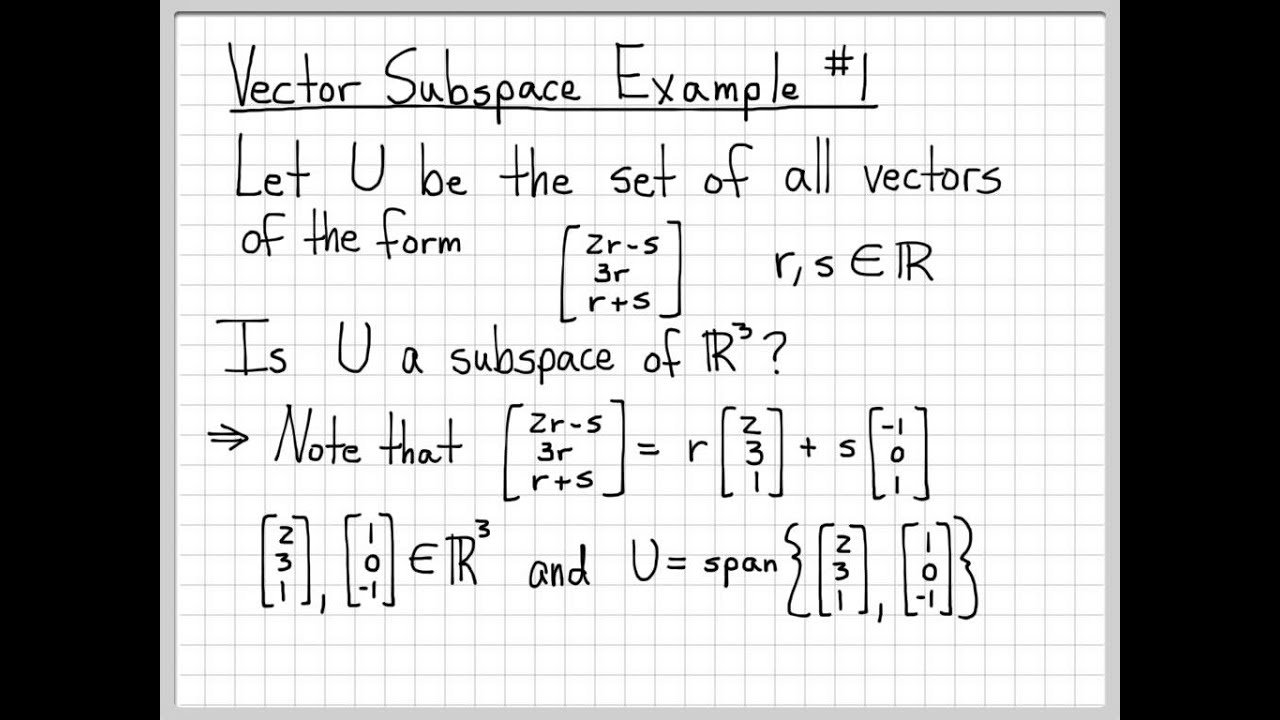

Testing If A Subset Of Vectors Of A Vector Space Gives A Subspace

Let $V$ be a vector space. Let $W$ be a set of vectors from $V$. How do we know if $W$ is a subspace of $V$?

First of all, that $W$ is a subset of $V$ does not automatically make it a subspace of $V$. For instance, if $W$ does not contain the zero vector, then it is not a vector space.

Of course, one can check if $W$ is a vector space by checking the properties of a vector space one by one. But in this case, it is actually sufficient to check that $W$ is nonempty and closed under vector addition and scalar multiplication as they are defined for $V$.
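The closure test can be tried out on small examples. The sketch below compares a line through the origin in the plane with a shifted copy of it; the two sets, the sample points, and the helper name `closed_on_samples` are illustrative assumptions. A failed check is a genuine counterexample, while a passed check on a few samples is only evidence, not a proof.

```python
def add(u, v):
    """Componentwise addition in R^2."""
    return (u[0] + v[0], u[1] + v[1])

def scale(a, v):
    """Scalar multiplication in R^2."""
    return (a * v[0], a * v[1])

def closed_on_samples(contains, samples, scalars):
    """Check closure under addition and scalar multiplication on sample data."""
    sums_ok = all(contains(add(u, v)) for u in samples for v in samples)
    multiples_ok = all(contains(scale(a, v)) for a in scalars for v in samples)
    return sums_ok and multiples_ok

def line_through_origin(v):
    """Membership test for the set of points with y = 2x."""
    return v[1] == 2 * v[0]

def shifted_line(v):
    """Membership test for the set of points with y = 2x + 1."""
    return v[1] == 2 * v[0] + 1

scalars = [-2, 0, 3]
on_line = [(0, 0), (1, 2), (-3, -6)]
on_shifted = [(0, 1), (1, 3), (-1, -1)]

print(closed_on_samples(line_through_origin, on_line, scalars))
print(closed_on_samples(shifted_line, on_shifted, scalars))
```

The shifted line fails already because (0, 1) + (0, 1) = (0, 2) is not on it, which matches the remark that a subset missing the zero vector cannot be a subspace.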


Trivial Or Zero Vector Space

The simplest example of a vector space is the trivial one: {0}, which contains only the zero vector 0. Both vector addition and scalar multiplication are trivial. A basis for this vector space is the empty set, so that {0} is the 0-dimensional vector space over F. Every vector space over F contains a subspace isomorphic to this one.

The zero vector space is conceptually different from the null space of a linear operator L, which is the kernel of L.

Alternative Formulations And Elementary Consequences

Vector addition and scalar multiplication are operations, satisfying the closure property: u + v and av are in V for all a in F, and u, v in V. Some older sources mention these properties as separate axioms.

In the parlance of abstract algebra, the first four axioms are equivalent to requiring the set of vectors to be an abelian group under addition. The remaining axioms give this group an F-module structure. In other words, there is a ring homomorphism f from the field F into the endomorphism ring of the group of vectors. Then scalar multiplication av is defined as (f(a))(v).

There are a number of direct consequences of the vector space axioms. Some of them derive from elementary group theory, applied to the additive group of vectors: for example, the zero vector 0 of V and the additive inverse −v of any vector v are unique. Further properties follow by employing also the distributive law for the scalar multiplication, for example av equals 0 if and only if a equals 0 or v equals 0.


A First Informal And Somewhat Restrictive Definition

Linear spaces are defined in a formal and very general way by enumerating the properties that the two algebraic operations performed on the elements of the spaces need to satisfy.

In order to gradually build some intuition, we start with a narrower approach, and we limit our attention to sets whose elements are matrices. Furthermore, we do not formally enumerate the properties of addition and multiplication by scalars because these have already been derived in previous lectures. After this informal presentation, we report a fully general and rigorous definition of vector space.

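Definition: Let $S$ be a set of matrices such that all the matrices in $S$ have the same dimension. $S$ is a linear space if and only if, for any two matrices $A$ and $B$ belonging to $S$ and any two scalars $\alpha$ and $\beta$, the linear combination $\alpha A + \beta B$ also belongs to $S$.

In other words, when $S$ is a linear space, if you take any two matrices belonging to $S$, you multiply each of them by a scalar, and you add together the products thus obtained, then you have a linear combination, which is also a matrix belonging to $S$.

Example: Let $S$ be the set of all $2 \times 1$ column vectors whose entries are real numbers. Consider two vectors $A$ and $B$ belonging to $S$, with entries $A_1, A_2$ and $B_1, B_2$. A linear combination of $A$ and $B$ having two real numbers $\alpha$ and $\beta$ as coefficients can be written as

$\alpha A + \beta B = \begin{pmatrix} \alpha A_1 + \beta B_1 \\ \alpha A_2 + \beta B_2 \end{pmatrix}$

But $\alpha A_1 + \beta B_1$ and $\alpha A_2 + \beta B_2$ are real numbers because products and sums of real numbers are also real numbers. Therefore, the two entries of the vector $\alpha A + \beta B$ are real numbers, which implies that the vector belongs to $S$. Since this is true for any couple of coefficients $\alpha$ and $\beta$, $S$ is a linear space.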

Introduction To Linear Algebra

Linear algebra is the study of linear combinations. It is the study of vector spaces, lines and planes, and the mappings between them, known as linear transformations. It includes vectors, matrices and linear functions. It is also the study of systems of linear equations and their transformation properties.

Linear Algebra Equations

The general linear equation is represented as

$a_1x_1 + a_2x_2 + \dots + a_nx_n = b$

$a_1, \dots, a_n$ represent the coefficients

$x_1, \dots, x_n$ represent the unknowns

$b$ represents the constant

A system of linear algebraic equations is a set of such equations in the same unknowns. The system of equations can be solved using matrices.

The left-hand side of each equation is a linear function of the unknowns, such as

$(x_1, \dots, x_n) \mapsto a_1x_1 + \dots + a_nx_n$
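As a small illustration of solving a system with matrices, the sketch below applies Cramer's rule to a system of two equations in two unknowns. The function name `solve_2x2` and the sample system are assumptions made for this example; larger systems are normally handled by Gaussian elimination instead.

```python
from fractions import Fraction

def solve_2x2(A, b):
    """Solve A x = b for a 2x2 matrix A with nonzero determinant (Cramer's rule)."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    if det == 0:
        raise ValueError("the coefficient matrix is singular")
    # Replace one column of A by b at a time and divide the determinants.
    x1 = Fraction(b[0] * A[1][1] - A[0][1] * b[1], det)
    x2 = Fraction(A[0][0] * b[1] - b[0] * A[1][0], det)
    return x1, x2

# Hypothetical system:  2*x1 + x2 = 5  and  x1 - x2 = 1
A = [[2, 1], [1, -1]]
b = [5, 1]

x1, x2 = solve_2x2(A, b)
print(x1, x2)  # 2 1
```

Substituting back gives 2·2 + 1 = 5 and 2 − 1 = 1, so both equations are satisfied.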

The most important topics covered in linear algebra include:

- Euclidean vector spaces

- Singular value decomposition

- Linear dependence and independence

Here, the three main concepts that are prerequisites to linear algebra are explained in detail. They are:

- Vector spaces

- Linear Functions

- Matrix

These three concepts are interrelated, such that a system of linear equations can be represented mathematically using them. In general terms, vectors are elements that we can add and scale, and linear functions are functions of vectors that preserve the addition of vectors.
