Difficulties With The Term Disorder

In recent years the long-standing use of the term “disorder” to discuss entropy has met with some criticism. Critics of the terminology state that entropy is not a measure of ‘disorder’ or ‘chaos’, but rather a measure of energy’s diffusion or dispersal to more microstates. Shannon’s use of the term ‘entropy’ in information theory refers to the most compressed, or least dispersed, amount of code needed to encompass the content of a signal.

Clausius's "Definition" Of Entropy

I enclose the word "definition" in inverted commas because Clausius did not really define entropy. Instead, he defined a small change in entropy for one very specific process. It should be added that, even before a proper definition of entropy was posited, entropy was confirmed as a state function, which means that whenever the macroscopic state of a system is specified, its entropy is determined.

Clausius's definition, together with the Third Law of Thermodynamics, led to the calculation of absolute values of the entropy of many substances.

Clausius started from one particular process: the spontaneous flow of heat from a hot to a cold body. Based on this specific process, Clausius defined a new quantity, which he called entropy. Let d

) , identical to the entropy of an ideal gas at equilibrium. This also justifies the reference to the distribution which maximizes the SMI as the equilibrium distribution.

It is now crystal clear that none of the definitions of entropy hints at any possible time dependence of the entropy. This aspect of entropy stands out most clearly from the third definition.

It is also clear that all the equivalent definitions render entropy a state function, meaning that entropy has a unique value for a well-defined thermodynamic system.

Why Does Entropy Increase When The Difference In Temperatures Is Decreased

From the Wikipedia article about entropy:

In irreversible heat transfer, heat energy is irreversibly transferred from the higher temperature system to the lower temperature system, and the combined entropy of the systems increases.

If entropy were a measure of disorder, or randomness, or the amount of information needed to describe the microstates of a system, then it seems that when the difference in temperatures is high, entropy should be high. A temperature gradient in the systems is a form of disorder hence should be a sign of higher entropy.

So why does entropy increase after the heat transfer, as the combined system approaches a uniform temperature? It seems to me like a state with less randomness or disorder.

- I changed the quote to another one from Wikipedia which expresses the same thing. (hb20007, Jun 22, 2016 at 15:44)

- I thought that a temperature gradient would be a form of order rather than disorder, which is consistent with entropy. (Anthony X, Jun 23, 2016 at 3:02)

- @hb20007 That is only correct if the system is adiabatically closed. If not, then there is no guarantee that the system entropy after equilibration is larger than before, and the increase of entropy in an adiabatically closed system is the fundamental law of nature that cannot be reduced to simpler statements. It is true of all kinds of equilibration, not just of the temperature.

Consider two tanks of water. One hot, one cold.
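The two-tank picture can be made quantitative with a minimal sketch. It assumes both tanks are large enough that their temperatures stay essentially constant while a small amount of heat passes between them, and it applies the Clausius relation (entropy change = heat / temperature) to each tank separately. The function name and the numbers (1000 J, 350 K, 300 K) are illustrative assumptions, not values from the original answer.

```python
def entropy_change_heat_transfer(q_joules: float, t_hot_k: float, t_cold_k: float) -> float:
    """Total entropy change (J/K) when heat q flows from a hot tank to a cold tank.

    Assumes each tank stays at a constant temperature during the transfer.
    """
    if q_joules < 0 or t_hot_k <= 0 or t_cold_k <= 0:
        raise ValueError("heat must be non-negative and temperatures positive (kelvin)")
    ds_hot = -q_joules / t_hot_k    # the hot tank loses heat, so its entropy falls
    ds_cold = q_joules / t_cold_k   # the cold tank gains the same heat, so its entropy rises
    return ds_hot + ds_cold


# Illustrative values (assumed): 1000 J flowing from 350 K to 300 K.
total = entropy_change_heat_transfer(1000.0, 350.0, 300.0)
print(f"Total entropy change: {total:.3f} J/K")  # Total entropy change: 0.476 J/K
```

The hot tank loses less entropy than the cold tank gains, because the same heat is divided by a larger temperature. The combined entropy therefore rises even though the temperatures are becoming more uniform.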


The Physical Meaning Of Entropy

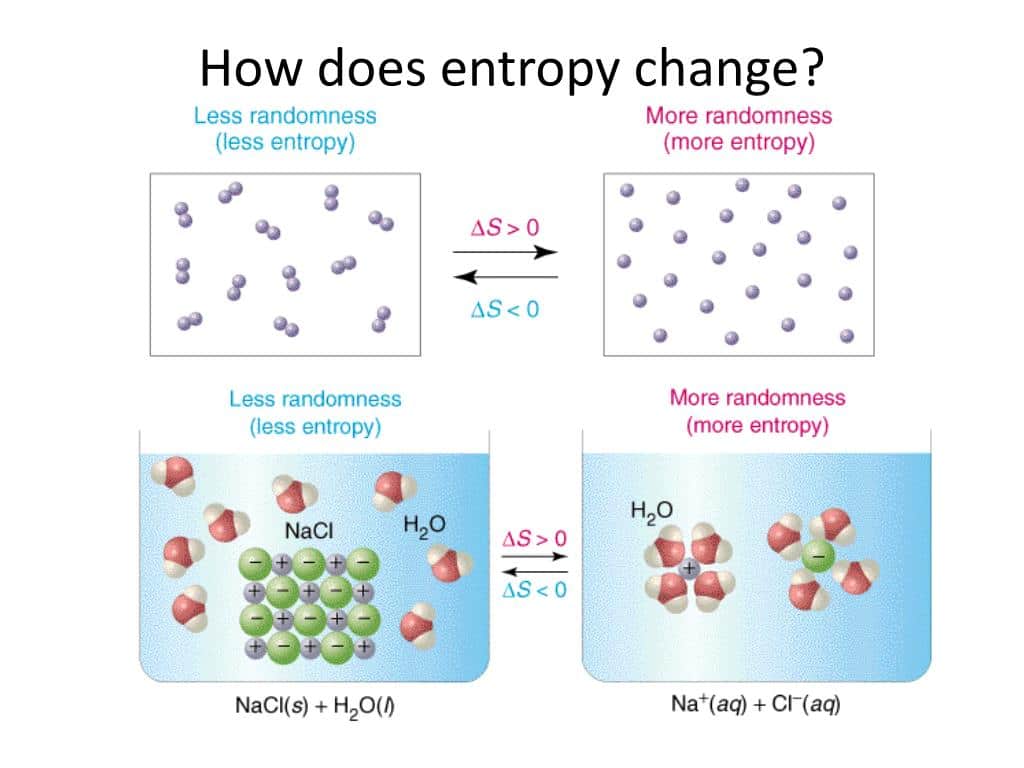

Entropy is a measure of the degree of spreading and sharing of thermal energy within a system.

This spreading and sharing can be spreading of the thermal energy into a larger volume of space or its sharing amongst previously inaccessible microstates of the system. The following table shows how this concept applies to a number of common processes.

Eddington's Contribution To The Mess

As we stated in Section 1, the origin of the association of entropy with time is due to Clausius. However, the explicit association of entropy with the Arrow of Time is due to Arthur Eddington.

There are two very well-known quotations from Eddington's book, The Nature of the Physical World. The first concerns the role of entropy and the Second Law, and the second introduces the idea of time's arrow.

The law that entropy always increases, holds, I think, the supreme position among the laws of Nature. If someone points out that your pet theory of the universe is in disagreement with Maxwell's equations, then so much the worse for Maxwell's equations. If it is found to be contradicted by observation, well, these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of thermodynamics I can give you no hope; there is nothing for it but to collapse in deep humiliation.

Let us draw an arrow arbitrarily. If as we follow the arrow we find more and more of the random element in the state of the world, then the arrow is pointing towards the future; if the random element decreases the arrow points towards the past. That is the only distinction known to physics. This follows at once if our fundamental contention is admitted that the introduction of randomness is the only thing which cannot be undone. I shall use the phrase "time's arrow" to express this one-way property of time which has no analogue in space.

In Rifkin's book we find:


Solving Problems Involving The Second Law Of Thermodynamics

Entropy is related not only to the unavailability of energy to do work; it is also a measure of disorder. For example, in the case of a melting block of ice, a highly structured and orderly system of water molecules changes into a disorderly liquid, in which molecules have no fixed positions. There is a large increase in entropy for this process, as we’ll see in the following worked example.

The Distribution Of Energy

To obtain some idea of what entropy is, it is helpful to imagine what happens when a small quantity of energy is supplied to a very small system. Suppose there are 20 units of energy to be supplied to a system of just 10 identical particles. The average energy is easily calculated to be two units per particle. However, the state in which every particle has exactly two units of energy can arise in only one way. If instead all the energy were concentrated in one particle, any one of the 10 particles could take it up, so this state of the system is 10 times more probable than every particle having two units each.

If the energy is shared equally between two particles, half of the energy could be given to any one of the 10 particles and the other half to any one of the remaining nine particles, giving 90 ways in which this state of the system could be achieved. However, since the particles are indistinguishable, each pair has been counted twice, and there are only 45 distinct ways in which this state can be achieved. Sharing the energy equally between four particles increases the number of possible ways this energy can be distributed to 210. This kind of calculation quickly becomes very tedious, even for a very small system of only 10 particles, and completely impracticable for systems where the number of particles approaches the number of atoms/molecules in even a very small fraction of one mole.
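The counts quoted above (10, 45 and 210) are binomial coefficients, and a short sketch can reproduce them. The helper names below are hypothetical. The second helper goes one step beyond the text: it assumes the energy comes in whole, indivisible units and counts every possible way of handing out the 20 units among the 10 particles (the standard "stars and bars" count), to give a feel for how fast the numbers grow.

```python
from math import comb

N_PARTICLES = 10   # number of particles, from the text
TOTAL_UNITS = 20   # units of energy, from the text


def ways_equal_share(n_particles: int, n_holders: int) -> int:
    """Ways to choose which n_holders particles share the energy equally."""
    return comb(n_particles, n_holders)


def ways_any_share(n_particles: int, total_units: int) -> int:
    """Ways to distribute whole energy units among the particles in any amounts.

    Assumption (not in the text): energy is handed out in indivisible units.
    """
    return comb(total_units + n_particles - 1, n_particles - 1)


for holders in (1, 2, 4, 10):
    print(holders, ways_equal_share(N_PARTICLES, holders))
# 1 10
# 2 45
# 4 210
# 10 1

print(ways_any_share(N_PARTICLES, TOTAL_UNITS))  # 10015005
```

Even for this tiny system there are about ten million possible distributions in total, which is why the counting is handled statistically for realistic particle numbers.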

Figure 1 – Boltzmann distribution of the number of particles with energy E at low, medium and high temperatures


What Is Entropy Definition And Examples

Entropy is a key concept in physics and chemistry, with application in other disciplines, including cosmology, biology, and economics. In physics, it is part of thermodynamics. In chemistry, it is part of physical chemistry. Here is the entropy definition, a look at some important formulas, and examples of entropy.

- Entropy is a measure of the randomness or disorder of a system.

- Its symbol is the capital letter S. Typical units are joules per kelvin (J/K).

- Change in entropy can have a positive or negative value.

- In the natural world, entropy tends to increase. According to the second law of thermodynamics, the entropy of a system only decreases if the entropy of another system increases.

Key Takeaways: Standard Molar Entropy

- Standard molar entropy is defined as the entropy or degree of randomness of one mole of a sample under standard state conditions.

- Usual units of standard molar entropy are joules per mole kelvin (J/(mol·K)).

- A positive value indicates an increase in entropy, while a negative value denotes a decrease in the entropy of a system.


Key Concepts And Summary

Entropy is a state function that can be related to the number of microstates for a system and to the ratio of reversible heat to kelvin temperature. It may be interpreted as a measure of the dispersal or distribution of matter and/or energy in a system, and it is often described as representing the disorder of the system.

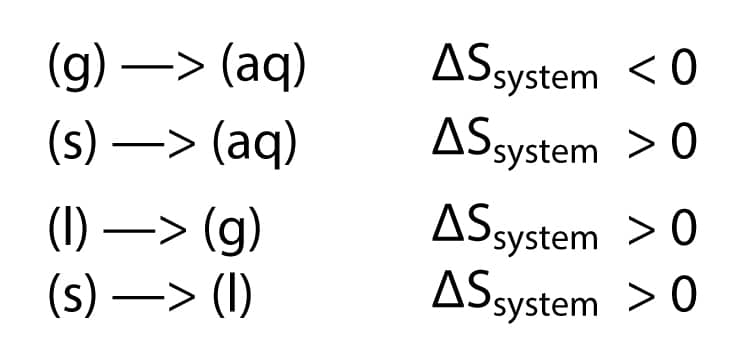

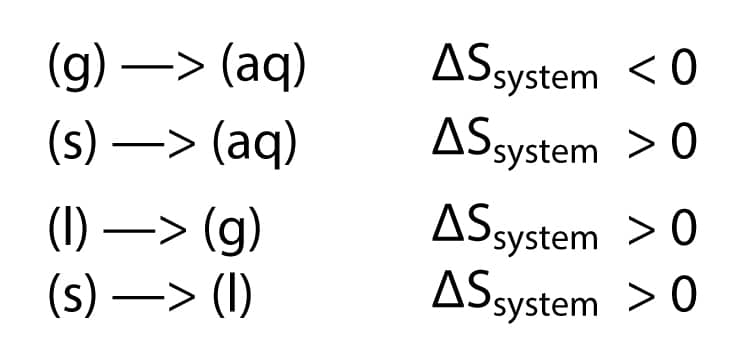

For a given substance, $S_{\text{solid}} < S_{\text{liquid}} < S_{\text{gas}}$. In a given physical state at a given temperature, entropy is typically greater for heavier atoms or more complex molecules. Entropy increases when a system is heated and when solutions form. Using these guidelines, the sign of entropy changes for some chemical reactions may be reliably predicted.

Relationship Between Enthalpy And Entropy

In order to define the relationship that exists between entropy and enthalpy, we need to introduce a new concept: the Gibbs free energy.

Gibbs free energy is used to measure the amount of available energy that a chemical reaction provides. As reactions are usually temperature dependent, and sometimes work significantly better at some temperatures than others, the tabulated $\Delta G_f^\circ$ values are valid only at 25 °C (298.15 K).

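Similar to the equations for $\Delta H$ and $\Delta S$ of a system, $\Delta G$ of a reaction is defined as the difference between the sum of the free energy of formation values of the products and that of the reactants:

$$\Delta G^\circ_{\text{reaction}} = \sum \Delta G^\circ_f(\text{products}) - \sum \Delta G^\circ_f(\text{reactants})$$

or, simplified,

$$\Delta G = G_{\text{products}} - G_{\text{reactants}}$$

If a reaction is not spontaneous, its $\Delta G$ will be positive. If a reaction is spontaneous, its $\Delta G$ will be negative.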

It’s important to note that spontaneous does not necessarily mean that the specific reaction proceeds at high rate. A spontaneous reaction can take ages to go to completion. A classic example is the rusting of metal.

Going back to enthalpy and entropy, we can define the relationship between these two values by correlating them with the Gibbs free energy. For all temperatures, including 25 °C, the following equation can be used to determine the spontaneity of a chemical reaction:

$$\Delta G = \Delta H - T\Delta S$$

This equation is valid only if:

- The temperature is in kelvin, obtained by adding 273.15 to the Celsius temperature.

- $\Delta S$ of the reaction is converted to kJ/K, so that its units match those of $\Delta H$ when $\Delta H$ is expressed in kJ.
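As a rough illustration of the two unit conditions above, the sketch below evaluates the Gibbs equation for the melting of ice. The function name is hypothetical, and the input values (an enthalpy of fusion of about 6.01 kJ/mol and an entropy of fusion of about 22.0 J/(mol·K)) are approximate textbook figures assumed for the example; they do not come from this article.

```python
def gibbs_free_energy_change(delta_h_kj: float, delta_s_j_per_k: float, temp_celsius: float) -> float:
    """Return delta G in kJ, using delta G = delta H - T * delta S."""
    temp_kelvin = temp_celsius + 273.15           # condition 1: temperature in kelvin
    delta_s_kj_per_k = delta_s_j_per_k / 1000.0   # condition 2: J/K converted to kJ/K
    return delta_h_kj - temp_kelvin * delta_s_kj_per_k


# Assumed approximate values for melting one mole of ice.
DELTA_H_FUSION_KJ = 6.01
DELTA_S_FUSION_J_PER_K = 22.0

for temp_c in (-10.0, 25.0):
    dg = gibbs_free_energy_change(DELTA_H_FUSION_KJ, DELTA_S_FUSION_J_PER_K, temp_c)
    verdict = "spontaneous" if dg < 0 else "not spontaneous"
    print(f"{temp_c:6.1f} °C: delta G = {dg:+.3f} kJ/mol ({verdict})")
```

With these inputs the sign of the result flips between the two temperatures: positive at -10 °C, where ice does not melt, and negative at 25 °C, where it does.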


Positive And Negative Entropy

The Second Law of Thermodynamics states that the entropy of an isolated system increases, so you might think entropy would always increase and that the change in entropy over time would always be a positive value.

As it turns out, sometimes the entropy of a system decreases. Is this a violation of the Second Law? No, because the law refers to an isolated system. When you calculate an entropy change in a lab setting, you decide on a system, but the environment outside your system is ready to compensate for any changes in entropy you might see. While the universe as a whole might experience an overall increase in entropy over time, small pockets of it can and do experience a decrease in entropy. For example, you can clean your desk, moving from disorder to order. Chemical reactions, too, can move from randomness to order. In general:

$S_{\text{gas}} > S_{\text{soln}} > S_{\text{liq}} > S_{\text{solid}}$

So a change in state of matter can result in either a positive or negative entropy change.

Entropy: The Hidden Force That Complicates Life

Entropy, a measure of disorder, explains why life seems to get more, not less, complicated as time goes on.

All things trend toward disorder. More specifically, the second law of thermodynamics states that as one goes forward in time, the net entropy of any isolated system will always increase.

Entropy is simply a measure of disorder and affects all aspects of our daily lives. In fact, you can think of it as nature's tax.

Left unchecked, disorder increases over time. Energy disperses, and systems dissolve into chaos. The more disordered something is, the more entropic we consider it. In short, we can define entropy as a measure of the disorder of the universe, on both a macroscopic and a microscopic level. The Greek root of the word translates to "a turning towards transformation", with that transformation being chaos.

Nor public flame, nor private, dares to shine
Nor human spark is left, nor glimpse divine!
Lo! thy dread empire, Chaos! is restored
Light dies before thy uncreating word:
Thy hand, great Anarch! lets the curtain fall
And universal darkness buries all.

Alexander Pope, The Dunciad

Let's take a look at what entropy is, why it occurs, and whether or not we can prevent it.


Internal Energy And Entropy

Internal energy is the energy contained within a thermodynamic system. It is an extensive property, and its absolute value cannot be measured directly; only changes in it can be determined. To find the change in a system's internal energy, we can use the following equation:

$$\Delta U = q + w$$

where $\Delta U$ = the change in the internal energy of a system, $q$ = the heat energy exchanged between a system and its surroundings (positive when absorbed by the system), and $w$ = the work done on the system (with this sign convention, work done by the system is negative).


What Standard Molar Entropy Means


You’ll encounter standard molar entropy in general chemistry, physical chemistry, and thermodynamics courses, so it’s important to understand what entropy is and what it means. Here are the basics regarding standard molar entropy and how to use it to make predictions about a chemical reaction.

Entropy And The Second Law Of Thermodynamics

The second law of thermodynamics states that the total entropy of an isolated system cannot decrease. For example, a scattered pile of papers never spontaneously orders itself into a neat stack. The heat, gases, and ash of a campfire never spontaneously re-assemble into wood.

However, the entropy of one system can decrease by raising the entropy of another system. For example, freezing liquid water into ice decreases the entropy of the water, but the entropy of the surroundings increases as the phase change releases energy as heat. There is no violation of the second law of thermodynamics because the water is not an isolated system. When the entropy of the system being studied decreases, the entropy of the environment increases.


Is The Second Law Wrong

It is not difficult to find examples of chemical reactions that appear to contradict the rule that entropy increases in spontaneous processes.

Take, for example, the demonstration illustrated in the video below, where the two gases, hydrogen chloride and ammonia, diffuse along a tube and produce a white ring of solid ammonium chloride:

$$\mathrm{HCl(g) + NH_3(g) \rightarrow NH_4Cl(s)}$$

Hydrogen chloride and ammonia gases diffuse along a tube and react to produce a ring of solid ammonium chloride.

The two gases forming a solid clearly involve a decrease in entropy, yet the reaction occurs spontaneously.

In fact, we can calculate the numerical value of the entropy change from the figures in the table above:

- Total entropy of starting materials = 187 + 192 = 379 J K⁻¹ mol⁻¹

- Entropy of product = 95 J K⁻¹ mol⁻¹

- Entropy change = 95 − 379 = −284 J K⁻¹ mol⁻¹

As expected, a significant decrease. Does this mean the second law is wrong?
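One way to approach the question is to include the surroundings in the bookkeeping. The sketch below is illustrative only. It uses the three entropy values quoted above, but it also assumes a reaction enthalpy of about −176 kJ mol⁻¹ and a temperature of 298.15 K, neither of which is given in this text. It further assumes that all the heat released by the reaction passes to the surroundings at that constant temperature.

```python
# Standard molar entropies quoted in the text, in J K^-1 mol^-1.
S_REACTANTS = 187.0 + 192.0   # hydrogen chloride gas + ammonia gas
S_PRODUCT = 95.0              # solid ammonium chloride

# Assumptions for illustration (not stated in the text):
DELTA_H_KJ_PER_MOL = -176.0   # approximate enthalpy of reaction (exothermic)
TEMPERATURE_K = 298.15        # 25 °C

# Entropy change of the reacting system.
ds_system = S_PRODUCT - S_REACTANTS

# Heat released by the system is absorbed by the surroundings at temperature T.
ds_surroundings = -(DELTA_H_KJ_PER_MOL * 1000.0) / TEMPERATURE_K

ds_total = ds_system + ds_surroundings

print(f"System:       {ds_system:+.1f} J/(K mol)")
print(f"Surroundings: {ds_surroundings:+.1f} J/(K mol)")
print(f"Total:        {ds_total:+.1f} J/(K mol)")
```

Under these assumptions the heat released raises the entropy of the surroundings by more than the system loses, so the total entropy change is positive and the second law is not contradicted.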

How Did Entropy Become Associated With Time

Open any serious textbook on thermodynamics, and you will find that Entropy is a state function. This means that entropy is defined for equilibrium states. Therefore, it is clear that it has nothing to do with time. Yet, surprisingly many authors associate entropy with time, and some even equate entropy to time.

The association of entropy with the Arrow of Time has been discussed in great detail in my books: The Briefest History of Time, and Time's Arrow: The Timeless Nature of Entropy and the Second Law. In this Section, I will summarize my views on the origin of, and the reasons for, this distorted association of entropy with time. I will also present some outstanding quotations from the literature. More details are provided in the reference.

Open any book written by physicists which deals with a Theory of Time, Time's beginning, and Time's ending, and you will most likely find a lengthy discussion about the association of entropy and the Second Law of Thermodynamics with time. The origin of this association of Time's Arrow with entropy can be traced to Clausius's famous statement of the Second Law:

The entropy of the universe always increases.

The concept of Time's Arrow and its explicit association with the Second Law is attributed to Eddington, see Section 2.1. However, the association of the Second Law with time can be traced back to Boltzmann, who related the one-way property of time with the Second Law, see Section 2.2.


The Laws Of Thermodynamics

- First law: Energy is conserved; it can be neither created nor destroyed.

- Second law: In an isolated system, natural processes are spontaneous when they lead to an increase in disorder, or entropy.

- Third law: The entropy of a perfect crystal is zero when the temperature of the crystal is equal to absolute zero (0 K).

Predicting The Sign Of ΔS

The relationships between entropy, microstates, and matter/energy dispersal described previously allow us to make generalizations regarding the relative entropies of substances and to predict the sign of entropy changes for chemical and physical processes. Consider the phase changes of a substance. In the solid phase, the atoms or molecules are restricted to nearly fixed positions with respect to each other and are capable of only modest oscillations about these positions. With essentially fixed locations for the system's component particles, the number of microstates is relatively small. In the liquid phase, the atoms or molecules are free to move over and around each other, though they remain in relatively close proximity to one another. This increased freedom of motion results in a greater variation in possible particle locations, so the number of microstates is correspondingly greater than for the solid. As a result, $S_{\text{liquid}} > S_{\text{solid}}$, and the process of converting a substance from solid to liquid (melting) is characterized by an increase in entropy, $\Delta S > 0$. By the same logic, the reciprocal process (freezing) exhibits a decrease in entropy, $\Delta S < 0$.


Considering the various factors that affect entropy allows us to make informed predictions of the sign of $\Delta S$ for various chemical and physical processes.
