What Is Primary Reinforcement And How Does It Work

Primary reinforcers are reinforcers that are biologically significant. They are also called unconditioned reinforcers. They occur naturally and do not need to be learned. Examples include food, sleep, water, air, and sex.

Schedules Of Reinforcement Psychology

By: Pamela Li, MS, MBA. Pamela Li is a bestselling author. She is the Founder and Editor-in-Chief of Parenting For Brain. Her educational background is in Electrical Engineering and Business Management.

Last updated: Aug 26, 2022. Evidence Based.

Schedules of reinforcement can affect the results of operant conditioning, which is frequently used in everyday life, such as in the classroom and in parenting. Let's examine the common types of schedules and their applications.

Partial Schedules Of Reinforcement

Once a new behavior is learned, trainers often turn to another type of schedule, the partial or intermittent reinforcement schedule, to strengthen the new behavior.

A partial or intermittent reinforcement schedule rewards desired behaviors occasionally, but not every single time.

Behavior intermittently reinforced by a partial schedule is usually stronger. It is more resistant to extinction. Therefore, after a new behavior is learned using a continuous schedule, an intermittent schedule is often applied to maintain or strengthen it.

Many different types of intermittent schedules are possible. The four major types commonly used are based on two different dimensions: the time elapsed or the number of responses made. Each dimension can be either fixed or variable.

The four resulting intermittent reinforcement schedules are:

- Fixed ratio schedule

- Fixed interval schedule

- Variable ratio schedule

- Variable interval schedule


Example Of Fixed Interval Reinforcement

Fixed interval reinforcement is distributed after a fixed amount of time. Paychecks are often considered the most common form of fixed interval reinforcement. Another example of this schedule is giving a child an allowance if they clean their room. They only need to clean their room once: it doesn't matter whether they do it on Monday or Friday. As long as the room has been cleaned once that week, they receive the reinforcement.

What Is A Variable-Ratio Schedule

The American Psychological Association defines a variable-ratio schedule as “a type of intermittent reinforcement in which a response is reinforced after a variable number of responses.”

Schedules of reinforcement play a central role in the operant conditioning process. The frequency with which a behavior is reinforced can help determine how quickly a response is learned as well as how strong the response might be. Each schedule of reinforcement has its own unique set of characteristics.


How To Identify A Variable-Ratio Schedule

When identifying different schedules of reinforcement, it can be helpful to start by looking at the name of the individual schedule itself. In the case of variable-ratio schedules, the term “variable” indicates that the requirement for reinforcement changes unpredictably. “Ratio” indicates that reinforcement depends on the number of responses made rather than on the passage of time. Together, the term means that reinforcement is delivered after a varied number of responses.

It might also be helpful to contrast the variable-ratio schedule of reinforcement with the fixed-ratio schedule of reinforcement. In a fixed-ratio schedule, reinforcement is provided after a set number of responses.

For example, on a VR 5 schedule, an animal might receive a reward for every five responses, on average. One time, the reward would come after three responses, then seven responses, then five responses, and so on. Over time, the rewards average out to one for every five responses, but the actual delivery schedule remains unpredictable.

In a fixed-ratio schedule, on the other hand, the reinforcement schedule might be set at FR 5. This would mean that a reward is presented after every five responses. Where the variable-ratio schedule is unpredictable, the fixed-ratio schedule is set and predictable.

- Reinforcement provided after a varying number of responses

- Delivery schedule unpredictable

- Examples include slot machines, door-to-door sales, video games
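The FR 5 versus VR 5 contrast can be made concrete with a small simulation. This is a minimal Python sketch, not a standard implementation: the function names are hypothetical, and drawing each VR requirement uniformly from 1 to 9 (so that it averages 5) is an assumption; real VR schedules may use other distributions.

```python
import random

def fixed_ratio(n):
    """FR n: every n-th response is reinforced."""
    count = 0

    def respond():
        nonlocal count
        count += 1
        if count == n:
            count = 0  # requirement met: reward and start counting again
            return True
        return False

    return respond

def variable_ratio(mean, rng):
    """VR mean: the response requirement changes after every reward.

    Assumption: each requirement is drawn uniformly from 1..(2*mean - 1),
    which averages out to `mean` responses per reward.
    """
    requirement = rng.randint(1, 2 * mean - 1)
    count = 0

    def respond():
        nonlocal count, requirement
        count += 1
        if count == requirement:
            count = 0
            # The next requirement is unpredictable to the responder.
            requirement = rng.randint(1, 2 * mean - 1)
            return True
        return False

    return respond

fr5 = fixed_ratio(5)
vr5 = variable_ratio(5, random.Random(42))
fr_rewarded = [i for i in range(1, 31) if fr5()]
vr_rewarded = [i for i in range(1, 31) if vr5()]
print("FR 5 rewards after responses:", fr_rewarded)  # [5, 10, 15, 20, 25, 30]
print("VR 5 rewards after responses:", vr_rewarded)  # irregular gaps
```

Over many responses, both schedules deliver about one reward per five responses; only the VR version makes the timing of the next reward unpredictable.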

Basic Terms In Operant Conditioning

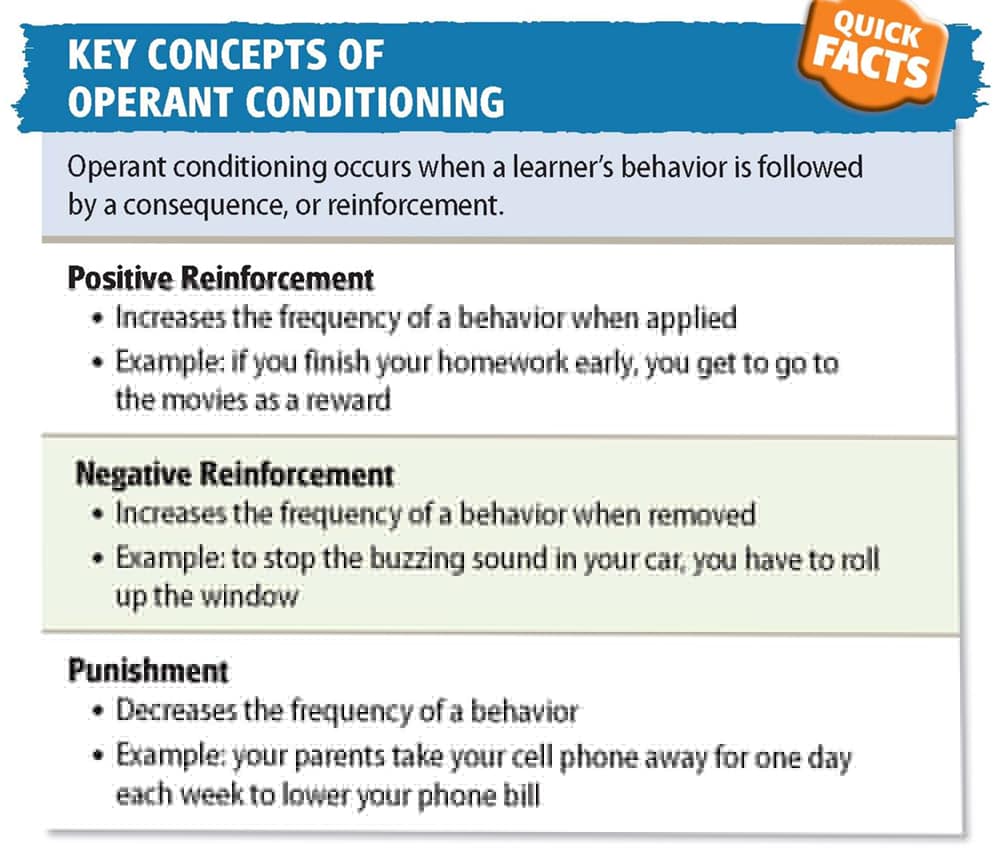

The subject is the person who is performing the behavior. Reinforcement can be positive or negative. Positive reinforcement is something added to a situation that encourages the subject to perform a behavior. A paycheck is a positive reinforcement: the subject is given money to encourage them to come to work.

Negative reinforcement is the removal of a stimulus, which encourages the subject to behave a certain way.

For example, you may set an alarm to wake you up in the morning. If you put the alarm clock on the other side of the room, you have to get up in order to turn it off. The removal of the blaring alarm encourages you to get up in the mornings.


Intermittent Schedules Of Reinforcement

In intermittent schedules of reinforcement, reinforcement is not provided for every instance of the behavior. Intermittent schedules are used to maintain behaviors that you have already taught. Once you have taught Jane to say please, you will use an intermittent schedule so Jane continues to say please in the future.

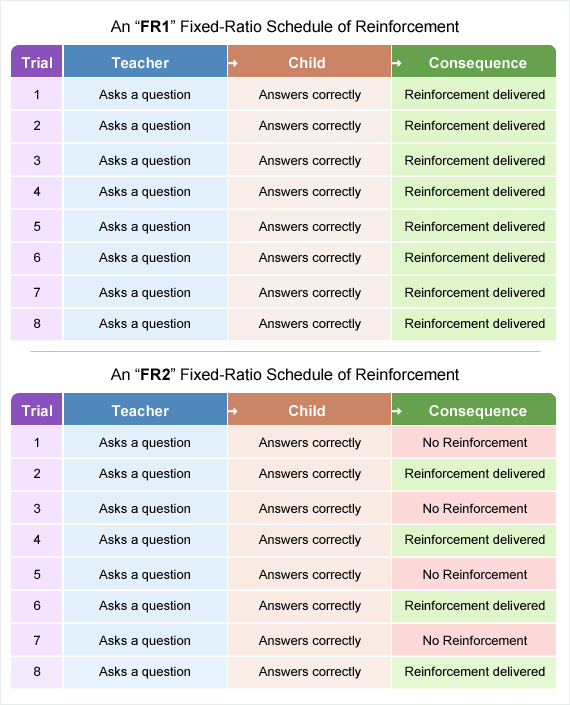

There are four types of intermittent schedules that you can use to maintain the behavior: 1) fixed ratio, 2) fixed interval, 3) variable ratio, and 4) variable interval.

Fixed Ratio: In a fixed ratio schedule, a specific or fixed number of behaviors must occur before you provide reinforcement. Example: You provide Jane with praise every fifth time Jane says please. Reinforcing please every fifth time means saying please is on an FR 5 schedule.

Fixed Interval: In a fixed interval schedule, the first behavior is reinforced after a specific or fixed amount of time has passed. Example: You provide Jane with praise the first time she says please after 60 minutes have passed. Reinforcing please after 60 minutes have passed means please is on an FI 60 schedule.

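Variable Ratio: In a variable ratio schedule, a varying number of behaviors must occur before you provide reinforcement. Example: You provide Jane with praise after she says please about every third, fifth, or seventh time. Reinforcing please after an average of five times means please is on a VR 5 schedule.

Variable Interval: In a variable interval schedule, the first behavior is reinforced after an average amount of time has passed. Example: You provide Jane with praise the first time she says please after roughly 55, 60, or 65 minutes have passed. Reinforcing please after an average of 60 minutes has passed means please is on a VI 60 schedule.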
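The FI 60 and VI 60 examples with Jane can be sketched the same way. In this hypothetical Python helper, times are minutes since the start of observation, the response times are made up, and timing each new interval from the moment reinforcement is delivered is an assumption for illustration (conventions vary).

```python
import random

def interval_schedule(response_times, next_interval):
    """Return the responses reinforced on an interval schedule.

    response_times: sorted times (minutes) at which the behavior occurs.
    next_interval: callable giving the length of the next interval.
    Only the first response after an interval has elapsed is reinforced;
    the next interval is timed from that reinforcement (an assumption).
    """
    reinforced = []
    available_at = next_interval()  # first interval timed from minute 0
    for t in response_times:
        if t >= available_at:
            reinforced.append(t)
            available_at = t + next_interval()
    return reinforced

says_please = [10, 45, 62, 70, 119, 125, 190]  # hypothetical observation times

# FI 60: every interval lasts exactly 60 minutes.
print(interval_schedule(says_please, lambda: 60))  # [62, 125, 190]

# VI 60: intervals vary (55, 60, or 65 minutes) around an average of 60.
rng = random.Random(7)
print(interval_schedule(says_please, lambda: rng.choice([55, 60, 65])))
```

Responses made before an interval has elapsed (such as minutes 10, 45, 70, and 119 under FI 60) earn nothing, which is why interval schedules reward waiting rather than responding quickly.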


Schedules Of Reinforcement In Parenting

Many parents use various types of reinforcement to teach new behavior, strengthen desired behavior or reduce undesired behavior.

A continuous schedule of reinforcement is often best for teaching a new behavior. Once the response has been learned, intermittent reinforcement can be used to strengthen it.

Reinforcement Schedules Example

Let's go back to the potty-training example.

When parents first introduce the concept of potty training, they may give the toddler a piece of candy whenever they use the potty successfully. That is a continuous schedule.

After the child has been using the potty consistently for a few days, the parents can transition to rewarding the behavior only intermittently, using a variable reinforcement schedule.

Sometimes, parents may unknowingly reinforce undesired behavior.

Because such reinforcement is unintended, it is often delivered inconsistently. The inconsistency serves as a type of variable reinforcement schedule, leading to a learned behavior that is hard to stop even after the parents have stopped applying the reinforcement.

Variable Ratio Example in Parenting

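When a toddler throws a tantrum in the store, parents usually refuse to give in. But once in a while, if they're tired or in a hurry, they may decide to buy the candy, believing they will do it just that one time. Those occasional payoffs put the tantrum on a variable-ratio schedule: the child learns that a tantrum sometimes works, which makes the behavior very persistent.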

This is one reason why consistency is important in disciplining children.


A Brief Description Of The Schedules Of Positive Reinforcement And Their Effects On Behavior


Continuous reinforcement is when behavior is reinforced each time it occurs: one reinforcer for each response. Because each operant is reinforced, the rate of behavior increases rapidly. However, with continuous reinforcement the animal responds until it is satiated. Continuous reinforcement offers little resistance to extinction and produces stereotyped response topography. Continuous reinforcement is rare in a natural environment, where most behavior is reinforced on an intermittent schedule.

An alternative to continuous reinforcement is intermittent schedules of reinforcement. With intermittent schedules of reinforcement, only some, not all, behavioral responses are reinforced. Intermittent schedules include ratio schedules of reinforcement and interval schedules of reinforcement. Ratio schedules are based on the number of responses given before reinforcement, whereas interval schedules are based on the amount of time that has passed before reinforcement is delivered. Both ratio and interval schedules can be either fixed or variable (random).

Thinner And Thicker Schedules Of Reinforcement

Sometimes you might hear the term thicker schedule of reinforcement or thinner schedule of reinforcement. These terms are used to describe a change that may be made to a schedule of reinforcement already being used.

For example, if a teaching programme was using an FR10 schedule, then a thinner schedule would mean increasing the number of correct responses needed to earn reinforcement, so the amount of reinforcement is reduced, or thinned. Think of thinner in terms of less reinforcement. For example, a thinner schedule than FR10 might be FR15, so the child would now have to give 15 correct responses before earning reinforcement.

A thicker schedule would mean decreasing the number of correct responses needed to earn reinforcement, so the amount of reinforcement is increased. Think of thicker in terms of more reinforcement. So a thicker schedule than FR10 might be FR5, so the child would now need only 5 correct responses before earning reinforcement. The term denser schedule of reinforcement is sometimes used for a thicker schedule; the two terms mean the same thing.
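The arithmetic behind thinning and thickening can be checked directly. This Python sketch assumes a hypothetical session of 30 correct responses and counts how many reinforcers each fixed-ratio requirement would yield; the function name is illustrative only.

```python
def reinforcers_earned(correct_responses, ratio):
    """Reinforcers earned on an FR `ratio` schedule (leftover responses earn nothing yet)."""
    return correct_responses // ratio

session_total = 30  # hypothetical number of correct responses in one session
for label, ratio in [("thicker, FR5", 5), ("current, FR10", 10), ("thinner, FR15", 15)]:
    print(f"{label}: {reinforcers_earned(session_total, ratio)} reinforcers")
# thicker, FR5: 6 reinforcers
# current, FR10: 3 reinforcers
# thinner, FR15: 2 reinforcers
```

The same amount of work earns twice as many reinforcers on the thicker schedule and a third fewer on the thinner one.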


Implications For Behavioral Psychology

In his article “Schedules of Reinforcement at 50: A Retroactive Appreciation,” Morgan describes the ways in which schedules of reinforcement are being used to research important areas of behavioral science.

Choice Behavior


Behaviorists have long been interested in how organisms make choices about behavior: how they choose between alternatives and reinforcers. They have been able to study behavioral choice through the use of concurrent schedules.

By operating two separate schedules of reinforcement simultaneously, researchers are able to study how organisms allocate their behavior to the different options.

An important discovery has been the matching law, which states that the relative rate at which an organism responds to each schedule closely matches the relative rate of reinforcement it has obtained from that schedule.

For instance, say that Joe's father gave Joe money almost every time Joe asked for it, but Joe's mother almost never gave Joe money when he asked for it. Since Joe's response of asking for money is reinforced more often when he asks his father, he is more likely to ask his father rather than his mother for money.
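In its simplest (strict) form, the matching law can be written as B1 / (B1 + B2) = R1 / (R1 + R2), where B is the number of responses made to each alternative and R is the number of reinforcers obtained from it. The Python sketch below applies that proportion to Joe's situation; the tallies of 18 and 2 are hypothetical, and real choice data often deviate from strict matching.

```python
def matching_share(reinforcers_1, reinforcers_2):
    """Predicted share of responses to option 1 under strict matching:
    B1 / (B1 + B2) = R1 / (R1 + R2)."""
    return reinforcers_1 / (reinforcers_1 + reinforcers_2)

# Hypothetical tallies: Joe got money 18 times from his father, 2 times from his mother.
share_father = matching_share(18, 2)
print(f"Predicted share of requests to father: {share_father:.0%}")  # 90%
```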

Research has found that individuals will try to choose behavior that will provide them with the largest reward. There are also further factors that impact an organism's behavioral choice: rate of reinforcement, quality of reinforcement, delay to reinforcement, and response effort.

Behavioral Pharmacology


What Is Fixed Interval Reinforcement

A fixed interval schedule provides a reward at consistent times. For example, a child may be rewarded once a week if their room is cleaned up. A problem with this type of reinforcement schedule is that individuals tend to wait until the time when reinforcement will occur and then begin their responses.


Other Schedules Of Reinforcement

As you were listening to that last example, you might find yourself thinking of an alternative way to reinforce your behavior. You might put yourself on a different schedule: for example, if you packed your lunch three times that week, you get the big chocolate bar on Friday. This is an intuitive way of reinforcing this behavior, but it's not on a fixed-ratio reinforcement schedule. Instead, it combines a response requirement (packing your lunch three times) with a time requirement (waiting until Friday).

In addition to the fixed-ratio reinforcement schedule, psychologists have identified Fixed Interval, Variable Interval, and Variable Ratio schedules. Below are quick examples of each type of schedule.

Example Of Variable Ratio Reinforcement

Like fixed ratio reinforcement, variable ratio reinforcement administers reinforcements after a certain number of behaviors are performed. Unlike fixed ratio reinforcement, that number varies from one reinforcement to the next. Slot machines are a great example of variable ratio reinforcement: it might take one, twelve, or 1,000 pulls of a slot machine for the user to win the jackpot!


Fixed Ratio Reinforcement Vs Continuous Reinforcement

If you set an alarm every morning, you aren't operating on a fixed-ratio reinforcement schedule. If reinforcement is delivered every time the subject performs a behavior, the subject is on a continuous reinforcement schedule. Continuous reinforcement schedules do work, but they aren't always practical. You can't give customers a free coffee every time they buy a coffee, or else you'd have to start doubling the price of coffee just to keep your business afloat! Instead of continuous reinforcement schedules, partial reinforcement schedules can be used to administer reinforcements in a more realistic way.

Fixed-ratio reinforcement is a partial reinforcement schedule. Subjects are not rewarded every time they perform a behavior, only after they have performed the behavior a certain number of times.

Continuous Schedule Of Reinforcement

Within an educational setting, a continuous schedule of reinforcement (CRF) would mean that the teacher delivers reinforcement after every correct response from their students. For example, if you were teaching a student to read the letters A, B, C, and D, then every time you presented one of these letters and the student read it correctly, you would deliver reinforcement.

For an everyday example, every time you press the number 9 button on your television remote control, your TV changes to channel 9; every time you turn on your kettle, it heats the water inside it; and every time you turn on your kitchen tap, water flows out of it.


What Is An Example Of Variable Ratio In Psychology

In operant conditioning, a variable-ratio schedule is a schedule of reinforcement in which a response is reinforced after an unpredictable number of responses. This schedule creates a steady, high rate of responding. Gambling and lottery games are good examples of rewards delivered on a variable-ratio schedule.

What Is Fixed Ratio Reinforcement

Fixed-ratio reinforcement is a schedule in which reinforcement is given out to a subject after a set number of responses. It is one of four partial reinforcement schedules that were identified by B.F. Skinner, the father of operant conditioning.

B.F. Skinner and operant conditioning are two terms that come from Behaviorism, the once predominant school of thought in psychology. Today, behaviorism no longer reigns supreme, but we can still find examples of operant conditioning and fixed ratio reinforcement in everyday life.


What Is A Fixed Ratio Schedule Used For

In operant conditioning, a fixed-ratio schedule reinforces behavior after a specified number of correct responses. This kind of schedule results in high, steady rates of responding, typically with a brief pause after each reinforcer is delivered.

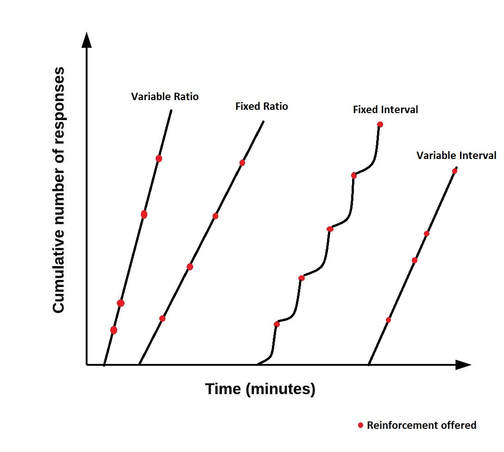

Response Rates Of Different Reinforcement Schedules


Ratio schedules (those linked to the number of responses) produce higher response rates than interval schedules.

Variable schedules also produce more consistent behavior than fixed schedules: unpredictable reinforcement results in more consistent responding than predictable reinforcement.


What Is Intermittent Reinforcement In Psychology

Intermittent reinforcement is the delivery of a reward after only some instances of a desired behavior rather than after every one. When rewards arrive at irregular, seemingly random points rather than on a regular schedule, as in variable schedules, the method tends to yield especially persistent effort from the subject.

Interval Schedules With A Limited Hold

Both fixed-interval and variable-interval schedules of reinforcement might have what is called a limited hold placed on them. When a limited hold is applied to either interval schedule, reinforcement is available only for a set period after each interval ends.

For example, using an FI2 schedule with a limited hold of 10 seconds means that when the 2-minute interval has ended, the child must engage in the target behaviour within 10 seconds; otherwise, no reinforcement is delivered and the 2-minute interval starts again. The limited hold is abbreviated as LH, so the example above would be written as FI2-minutes LH10-seconds, or sometimes FI2min LH10sec.
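The FI2-minutes LH10-seconds example can be sketched as follows. This is a hypothetical Python helper with times in seconds; two details are assumptions for illustration: after a reinforced response, the next interval is timed from that response, and when a hold window lapses, the next interval is timed from the end of the window.

```python
def fixed_interval_limited_hold(response_times, interval, hold):
    """Return the responses reinforced on an FI schedule with a limited hold (LH).

    Reinforcement becomes available when `interval` seconds have elapsed and
    stays available for `hold` seconds. A response inside that window is
    reinforced; if the window passes with no response, the interval restarts
    without reinforcement.
    """
    reinforced = []
    start = 0.0  # when the current interval began
    for t in sorted(response_times):
        # Restart the interval for every hold window that expired before this response.
        while t > start + interval + hold:
            start += interval + hold
        if start + interval <= t <= start + interval + hold:
            reinforced.append(t)
            start = t  # next interval is timed from the reinforced response
    return reinforced

# FI 2 minutes (120 s) with LH 10 s; response times are made up.
print(fixed_interval_limited_hold([30, 125, 200, 260, 380], interval=120, hold=10))
# [125, 380]
```

Here the response at 260 seconds is not reinforced: the window that opened at 245 seconds closed at 255, so a new 2-minute interval began and the next window opened at 375 seconds.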


Connect The Concepts: Gambling And The Brain

Skinner stated, “If the gambling establishment cannot persuade a patron to turn over money with no return, it may achieve the same effect by returning part of the patron’s money on a variable-ratio schedule.”

Figure 2. Some research suggests that pathological gamblers use gambling to compensate for abnormally low levels of the hormone norepinephrine, which is associated with stress and is secreted in moments of arousal and thrill.

Skinner uses gambling as an example of the power of the variable-ratio reinforcement schedule for maintaining behavior even during long periods without any reinforcement. In fact, Skinner was so confident in his knowledge of gambling addiction that he even claimed he could turn a pigeon into a pathological gambler. It is indeed true that variable-ratio schedules keep behavior quite persistent: just imagine the frequency of a child's tantrums if a parent gives in even once to the behavior. The occasional reward makes it almost impossible to stop the behavior.

In addition to dopamine, gambling also appears to involve other neurotransmitters, including norepinephrine and serotonin. Norepinephrine is secreted when a person feels stress, arousal, or thrill. It may be that pathological gamblers use gambling to increase their levels of this neurotransmitter. Deficiencies in serotonin might also contribute to compulsive behavior, including a gambling addiction.