Subsection 4.2.4 Elementary Row and Column Operations

The following is a series of facts about elementary row and column operations on an \(m\times n\) matrix \(A.\)

- The matrix \(A\) can be put in reduced row echelon form by a sequence of elementary row operations.
- Each elementary row operation can be achieved by left multiplication of \(A\) (\(A \mapsto EA\)) by an \(m\times m\) elementary matrix.
- Each elementary column operation can be achieved by right multiplication of \(A\) (\(A \mapsto AE\)) by an \(n\times n\) elementary matrix.
- Every elementary matrix is invertible and its inverse is again an elementary matrix of the same type.
- The rank of an \(m\times n\) matrix is unchanged by elementary row or column operations, that is \(\operatorname{rank}(EA) = \operatorname{rank}(A)\) and \(\operatorname{rank}(AE)=\operatorname{rank}(A)\) for appropriately sized elementary matrices \(E.\)
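The facts about left and right multiplication can be checked on a small case. The following is a minimal sketch, assuming a \(2\times 3\) example matrix chosen only for illustration; the helper names `identity`, `matmul`, and `add_multiple` are hypothetical and not part of the text.

```python
from fractions import Fraction

def identity(n):
    """n x n identity matrix with exact rational entries."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def matmul(X, Y):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def add_multiple(n, i, j, c):
    """Elementary matrix: the identity with an extra entry c at position (i, j)."""
    E = identity(n)
    E[i][j] = Fraction(c)
    return E

# Illustrative 2 x 3 matrix (an assumption, not taken from the text).
A = [[Fraction(1), Fraction(2), Fraction(3)],
     [Fraction(4), Fraction(5), Fraction(6)]]

# Row operation R2 <- R2 - 4*R1: left multiplication by a 2 x 2 elementary matrix.
E_row = add_multiple(2, 1, 0, -4)
EA = matmul(E_row, A)

# Column operation C3 <- C3 - 3*C1: right multiplication by a 3 x 3 elementary matrix.
E_col = add_multiple(3, 0, 2, -3)
AE = matmul(A, E_col)

# The inverse of E_row is the elementary matrix of the same type with c = +4.
E_row_inv = add_multiple(2, 1, 0, 4)

print(EA)
print(AE)
print(matmul(E_row, E_row_inv) == identity(2))
```

Note that the same kind of matrix acts on rows when it multiplies from the left and on columns when it multiplies from the right, which is why the sizes differ (\(m\times m\) versus \(n\times n\)).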

Every invertible matrix is a product of elementary matrices, and this leads to the

Algorithm 4.2.4.

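To determine whether an \(n\times n\) matrix \(A\) is invertible and if so find its inverse, reduce to reduced row-echelon form the “augmented” \(n\times 2n\) matrix

\[ [A \mid I_n] \mapsto [R \mid B]. \]

The matrix \(A\) is invertible if and only if \(R = I_n,\) and in that case \(B\) is the inverse \(A^{-1}.\)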
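The algorithm can be sketched in a few lines of Python. This is an illustrative sketch rather than a definitive implementation: the function names `rref` and `inverse` are hypothetical, exact arithmetic with `fractions.Fraction` is an assumption made to avoid rounding issues, and the test matrices are chosen only for illustration.

```python
from fractions import Fraction

def rref(M):
    """Return the reduced row-echelon form of M (a list of rows), computed exactly."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    pivot_row = 0
    for col in range(cols):
        if pivot_row == rows:
            break
        # Look for a nonzero entry in this column at or below the current pivot row.
        pr = next((r for r in range(pivot_row, rows) if M[r][col] != 0), None)
        if pr is None:
            continue
        M[pivot_row], M[pr] = M[pr], M[pivot_row]            # swap rows
        p = M[pivot_row][col]
        M[pivot_row] = [x / p for x in M[pivot_row]]         # scale to a leading 1
        for r in range(rows):                                # clear the rest of the column
            if r != pivot_row and M[r][col] != 0:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[pivot_row])]
        pivot_row += 1
    return M

def inverse(A):
    """Row-reduce [A | I]; return B if the left block becomes I, otherwise None."""
    n = len(A)
    augmented = [list(row) + [int(i == j) for j in range(n)]
                 for i, row in enumerate(A)]
    reduced = rref(augmented)
    left = [row[:n] for row in reduced]
    right = [row[n:] for row in reduced]
    eye = [[int(i == j) for j in range(n)] for i in range(n)]
    return right if left == eye else None

print(inverse([[2, 1], [5, 3]]))   # invertible example
print(inverse([[1, 2], [2, 4]]))   # singular example, gives None
```

Each row operation performed inside `rref` corresponds to left multiplication by an elementary matrix, so the right-hand block accumulates the product of those matrices, which is why it equals the inverse when the left block reduces to the identity.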

Exercises

1. Let \(A\) be an \(m\times n\) matrix and \(E\) an elementary matrix of the appropriate size.

   - Are the row spaces of \(A\) and \(EA\) the same?
   - Are the column spaces of \(A\) and \(EA\) the same?
   - If \(R\) is the reduced row-echelon form of \(A,\) are the nonzero rows of \(R\) a basis for the row space of \(A\)?
   - If \(R\) is the reduced row-echelon form of \(A,\) is the column space of \(R\) the same as the column space of \(A\)?

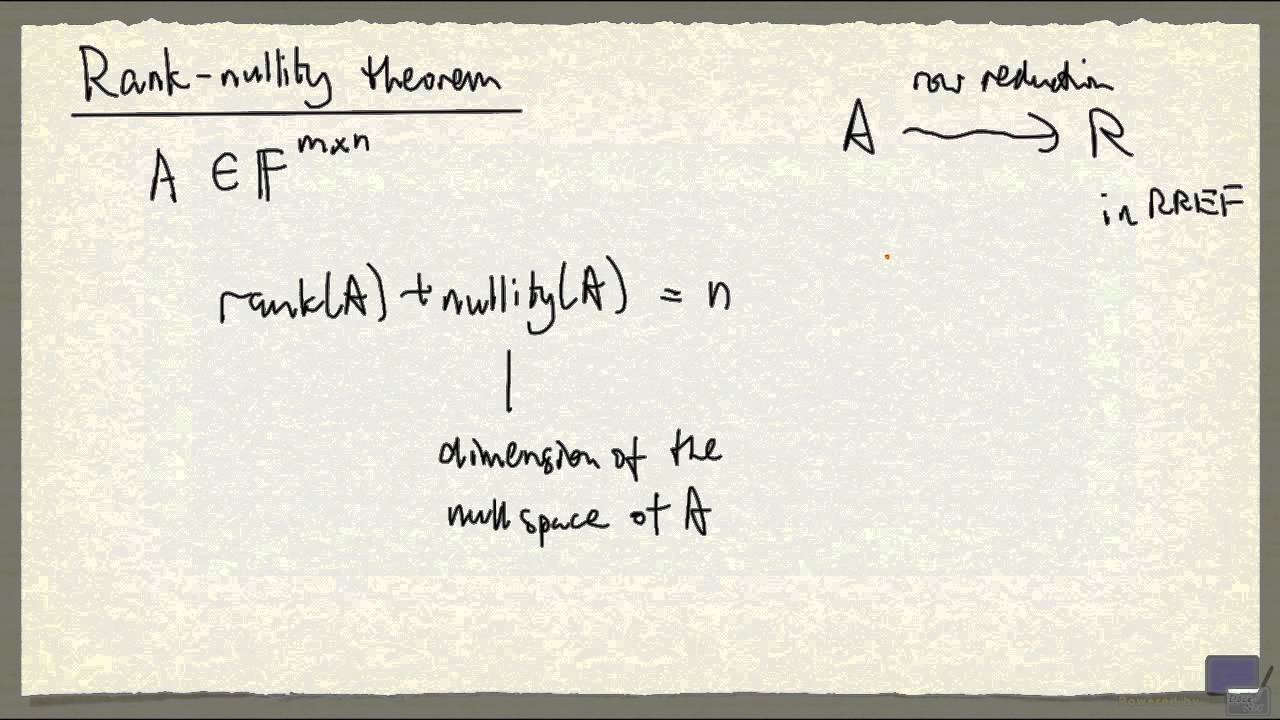

Rank + Nullity Theorem

I'm looking to give an example of a $4 \times 5$ matrix $A$ with $\dim(\operatorname{null}(A)) = 3$. My thinking here is that by the rank-nullity theorem, $\operatorname{rank}(A) + \dim(\operatorname{null}(A)) = \text{number of columns}$. The number of columns is $5$, so $5 = \operatorname{rank}(A) + 3$. I got the $3$ from $\dim(\operatorname{null}(A)) = 3$. So the rank of $A$ would be $2$, and this would just be a $4 \times 5$ matrix whose reduced row-echelon form has two leading 1’s.

Just wondering if my logic was correct. Can I get the nullity of the matrix from the statement $\dim(\operatorname{null}(A)) = 3$ like I did?

$A$ is a map from $V=\mathbb{R}^5$ to $W=\mathbb{R}^4$. The theorem indeed says

$$\operatorname{rk} A + \operatorname{nul} A = \dim V \iff \operatorname{rk} A + 3 = 5 \iff \operatorname{rk} A = 2$$

and

$$A=\begin{pmatrix}1 & 0 & 0 & 0 & 0 \\0 & 1 & 0 & 0 & 0 \\0 & 0 & 0 & 0 & 0 \\0 & 0 & 0 & 0 & 0\end{pmatrix}$$

would do the job.
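The count above can be checked mechanically. The following is a minimal sketch, assuming a hypothetical helper `rank` that counts pivots during Gaussian elimination with exact fractions; the second matrix `B` is an illustrative example that is not part of the answer.

```python
from fractions import Fraction

def rank(M):
    """Rank of M (a list of rows), found by counting pivots in Gaussian elimination."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    r = 0  # number of pivots found so far
    for col in range(cols):
        pr = next((i for i in range(r, rows) if M[i][col] != 0), None)
        if pr is None:
            continue  # no pivot in this column
        M[r], M[pr] = M[pr], M[r]
        for i in range(r + 1, rows):
            f = M[i][col] / M[r][col]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

# The 4 x 5 matrix from the answer above.
A = [[1, 0, 0, 0, 0],
     [0, 1, 0, 0, 0],
     [0, 0, 0, 0, 0],
     [0, 0, 0, 0, 0]]

# A less obvious 4 x 5 example (assumed for illustration): row 3 = row 1 + row 2
# and row 4 = row 2 - 2 * row 1, so only two rows are independent.
B = [[1, 2, 0, 1, 3],
     [2, 4, 1, 0, 1],
     [3, 6, 1, 1, 4],
     [0, 0, 1, -2, -5]]

for name, M in (("A", A), ("B", B)):
    n_cols = len(M[0])
    print(name, "rank =", rank(M), "nullity =", n_cols - rank(M))
```

In both cases the nullity is obtained as the number of columns minus the rank, which is exactly the step used in the question.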

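Take $A=\begin{pmatrix}1 & 0 & 0 & 0 & 0 \\0 & 1 & 0 & 0 & 0 \\0 & 0 & 0 & 0 & 0 \\0 & 0 & 0 & 0 & 0\end{pmatrix}$.

Let $\mathbf{x}=(x_1,x_2,x_3,x_4,x_5) \in\mathbb R^5$. Of course $A \mathbf{x} = (x_1,x_2,0,0)$. The kernel of $A$ is the subspace, isomorphic to $\mathbb R^3$, generated by vectors of the form $(0,0,x_3,x_4,x_5)$, so it has dimension $3$. Alternatively, we can see that $\dim \mathbb R^5- \operatorname{rank} A =\dim \ker A$, and the rank is exactly $2$.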

The “number of columns” you mention is the dimension of the domain for $A$ as a linear operator.

The number of independent linear dependencies among the columns gives the dimension of the null space, that is, of the kernel of your map. In row echelon form, this is the number of columns that do not contain a leading one (the free columns).

The number of linearly independent columns tells you the dimension of the image of $A$. These correspond to the leading ones that you were thinking of.

you can ignore the rest if you haven’t had any algebra:

Proof of Rank-Nullity Theorem

I’m trying to understand the proof of the rank-nullity theorem, but there are parts that I don’t understand:

Steinitz exchange lemma

If $U=\{u_1,\dots ,u_m\}$ is a set of $m$ linearly independent vectors in a vector space $V$, and $W=\{w_1,\dots ,w_n\}$ spans $V$, then:

$m\leq n$;

There is a set $W'\subseteq W$ with $|W'|=n-m$ such that $U\cup W'$ spans $V$.

Ranknullity theorem

Let $V$, $W$ be vector spaces, where $V$ is finite dimensional. Let $T\colon V\to W$ be a linear transformation. Then

$$\operatorname{Rank}(T) +\operatorname{Nullity}(T) =\dim V$$

Proof

Let $V, W$ be vector spaces over some field $F$ and $T$ defined as in the statement of the theorem with $\dim V = n$.

As $\operatorname{Ker} T\subset V$ is a subspace, there exists a basis for it. Suppose $\dim \operatorname{Ker} T=k$

I know that $\operatorname{Ker} T$ is a subspace of the finite-dimensional space $V$, and hence is finite-dimensional, but how does this imply that $\operatorname{Ker} T$ has a basis?

and let $\mathcal{K}:=\{v_1,\ldots ,v_k\}\subset \operatorname{Ker}(T)$ be such a basis.

We may now, by the Steinitz exchange lemma, extend $\mathcal{K}$ with $n-k$ linearly independent vectors $w_1,\ldots ,w_{n-k}$ to form a full basis of $V$.

Let $\mathcal{S}:=\{w_1,\ldots ,w_{n-k}\}\subset V\setminus \operatorname{Ker}(T)$ such that

$\mathcal{B}:=\mathcal{K}\cup \mathcal{S}=\{v_1,\ldots ,v_k,w_1,\ldots ,w_{n-k}\}\subset V$ is a basis for $V$.

What are $V, W, W', U$ here? The proof uses the Steinitz exchange lemma, but I can’t identify which sets play the roles of $V, W, W', U$.

From this, we know that

$$\operatorname{Im} T=\operatorname{Span} T(\mathcal{B})=\operatorname{Span}\{T(v_1),\ldots ,T(v_k),T(w_1),\ldots ,T(w_{n-k})\}=\operatorname{Span}\{T(w_1),\ldots ,T(w_{n-k})\}=\operatorname{Span} T(\mathcal{S})$$

Why is $\operatorname{Im} T=\operatorname{Span} T(\mathcal{B})$?

And why is $\operatorname{Span}\{T(v_1),\ldots ,T(v_k),T(w_1),\ldots ,T(w_{n-k})\}=\operatorname{Span}\{T(w_1),\ldots ,T(w_{n-k})\}$?

Thanks for your help.


Introduction To Linear Algebra

I. Introduction

This blog entry will expand on my previous entry on linear transformations. The Rank-Nullity Theorem will be introduced, as will the notion of inverting linear transformations and vector space isomorphisms.

II. Rank

The range of a linear transformation $T$ is defined as the subset of the codomain that is mapped to, that is, the set of all outputs $T(x)$. This can easily be determined from the matrix representation of $T$, based on a largest subset of linearly independent column vectors from the matrix. The cardinality of this set is called the rank. The rank is the dimension of the space that the column vectors span.

For example, if the rank of a matrix $A$ is 2, then there are two linearly independent column vectors in $A$. This means that the range is a plane in the codomain, or that the set of column vectors in $A$ spans a two-dimensional subspace of the codomain.

Let’s look at a couple of examples. Let .

and are linearly dependent. Thus, there are two linearly independent column vectors in A.

From here, the range of a linear transformation represented by the transformation matrix A is defined as .

to be defined as follows: .

Here, the column vectors are all in $\mathbb{R}^2$. Thus, by the linear independence tests, any set of vectors from $\mathbb{R}^2$ with more than two elements is linearly dependent. As none of these vectors are multiples of each other, any subset of the column vectors with at most two elements is linearly independent. Thus, the rank is 2. The range of a linear transformation represented by this matrix is the span of two of its column vectors, and any two column vectors of the matrix can be used in defining the range.

Subsection 4.2.3 Computing Rank and Nullity

Let \(A \in M_{m\times n}(F)\) be a matrix. Then \(T(x) = Ax\) defines a linear map \(T\colon F^n \to F^m.\) Indeed in Subsection 4.3.2, we shall see how to translate the action of an arbitrary linear map between finite-dimensional vector spaces into an action of a matrix on column vectors.

Let’s recall how to extract the image and kernel of the linear map \(T.\) We know that the image of any linear map is obtained by taking the span of \(T(e_1), \dots,T(e_n)\) where \(\{e_1, \dots, e_n\}\) is any basis for \(F^n,\) the domain. Indeed if we choose the \(e_j\) to be the standard basis vectors (with a 1 in the \(j\)th coordinate and zeroes elsewhere), then \(T(e_j)\) is simply the \(j\)th column of the matrix \(A.\) Thus \(\operatorname{Im}(T)\) is the column space of \(A.\) However to determine the rank of \(A,\) we would need to know which columns form a basis. We’ll get to that in a moment.

The nullspace of \(T\) is the set of solutions to the homogeneous linear system \(Ax = 0.\) You may recall that a standard method to deduce the solutions is to put the matrix \(A\) in reduced row-echelon form. That means that all rows of zeros are at the bottom, the leading nonzero entry of each row is a one, each leading one lies to the right of the leading one in the row above it, and in every column containing a leading 1, all other entries are zero. These leading ones play several roles.
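The reduction just described can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name `rref_with_pivots` and the \(3\times 3\) example matrix are hypothetical, and exact fractions are used in place of a general field \(F.\) The function also records which columns receive a leading one.

```python
from fractions import Fraction

def rref_with_pivots(M):
    """Return (R, pivots): the reduced row-echelon form of M and its pivot column indices."""
    R = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(R), len(R[0])
    pivots = []
    pivot_row = 0
    for col in range(cols):
        if pivot_row == rows:
            break
        pr = next((r for r in range(pivot_row, rows) if R[r][col] != 0), None)
        if pr is None:
            continue  # no leading one in this column
        R[pivot_row], R[pr] = R[pr], R[pivot_row]
        p = R[pivot_row][col]
        R[pivot_row] = [x / p for x in R[pivot_row]]          # make the leading entry 1
        for r in range(rows):                                 # zero out the rest of the column
            if r != pivot_row and R[r][col] != 0:
                f = R[r][col]
                R[r] = [a - f * b for a, b in zip(R[r], R[pivot_row])]
        pivots.append(col)
        pivot_row += 1
    return R, pivots

# Illustrative matrix (an assumption): the second column is twice the first.
A = [[1, 2, 1],
     [2, 4, 0],
     [3, 6, 1]]

R, pivots = rref_with_pivots(A)
rank = len(pivots)               # number of leading ones
nullity = len(A[0]) - rank       # number of columns without a leading one
column_space_basis = [[row[j] for row in A] for j in pivots]  # pivot columns of A itself

print(R, pivots, rank, nullity, column_space_basis)
```

The basis for the column space is read off from the columns of the original matrix at the pivot positions, not from the columns of the reduced matrix, since row operations generally change the column space.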

Proposition 4.2.3.


Column Rank = Row Rank

Theorem.

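The row space $\mathcal{R}(A)$ and column space $\mathcal{C}(A)$ of a matrix $A$ have the same dimension.

Proof.

Let $A \in \mathbb{R}^{m \times n}$, and let $r$ be the dimension of $\mathcal{R}(A)$.

Then there is a basis of $\mathcal{R}(A)$ consisting of $r$ vectors. Call them $\mathbf{x}_1, \mathbf{x}_2, \dots, \mathbf{x}_r \in \mathbb{R}^{n}$.

We will check that $A\mathbf{x}_1, A\mathbf{x}_2, \dots, A\mathbf{x}_r$ are linearly independent in $\mathcal{C}(A)$.

Suppose $A\mathbf{x}_1, \dots, A\mathbf{x}_r$ are not linearly independent.

Then there exist $c_i \in \mathbb{R}$, not all zero, s.t. $\displaystyle \sum^r_{i=1} c_i A\mathbf{x}_i = 0$.

However, we can combine each $c_i$ with $\mathbf{x}_i$.

That is, we build a vector $\mathbf{v} = c_1 \mathbf{x}_1 + c_2 \mathbf{x}_2 + \dots + c_r \mathbf{x}_r \in \mathcal{R}(A)$ and write the above situation as $A\mathbf{v} = 0$.

$A\mathbf{v} = 0$ means $\mathbf{v}$ is orthogonal to every row of $A$, and hence to every vector in $\mathcal{R}(A)$. However, $\mathbf{v} \in \mathcal{R}(A)$. Thus $\mathbf{v}$ is orthogonal to itself and must be zero. ($\mathbf{v}^\top \mathbf{v} = 0$)

Then $\mathbf{v} = 0$ means all of the $c_i = 0$, because $\mathbf{x}_1, \dots, \mathbf{x}_r$ are a basis. This contradicts the choice of the $c_i$.

So $A\mathbf{x}_1, \dots, A\mathbf{x}_r$ are linearly independent in $\mathcal{C}(A)$.

Therefore, there are at least $r$ linearly independent vectors in $\mathcal{C}(A)$, that is, $\dim \mathcal{C}(A) \geq \dim \mathcal{R}(A)$. Applying the same argument to $A^\top$ gives the reverse inequality.

Therefore, $\dim \mathcal{R}(A) = \dim \mathcal{C}(A)$.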

$\blacksquare$
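A quick numerical sanity check of the statement, not a substitute for the proof: the sketch below compares the rank of a matrix with the rank of its transpose. The helper names `rank` and `transpose` and the example matrices are assumptions made for illustration.

```python
from fractions import Fraction

def rank(M):
    """Rank of M (a list of rows), found by counting pivots in Gaussian elimination."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    r = 0
    for col in range(cols):
        pr = next((i for i in range(r, rows) if M[i][col] != 0), None)
        if pr is None:
            continue
        M[r], M[pr] = M[pr], M[r]
        for i in range(r + 1, rows):
            f = M[i][col] / M[r][col]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def transpose(M):
    """Swap rows and columns, so the columns of M become the rows of the result."""
    return [list(col) for col in zip(*M)]

examples = [
    [[1, 2, 0, 1, 3], [2, 4, 1, 0, 1], [3, 6, 1, 1, 4], [0, 0, 1, -2, -5]],
    [[1, 2], [3, 4], [5, 6]],
    [[0, 0, 0], [0, 0, 0]],
]

for M in examples:
    row_rank = rank(M)                # pivots found while reducing the rows of M
    col_rank = rank(transpose(M))     # the same procedure applied to the columns of M
    print(row_rank, col_rank, row_rank == col_rank)
```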

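For any $A \in \mathbb{R}^{m \times n}$,

$$\operatorname{rank}(A) + \dim(\mathcal{N}(A)) = n.$$

Proof.

Suppose $\operatorname{rank}(A) = n$. Then the only solution of $A \mathbf{x} = 0$ is $\mathbf{x} = 0$.

Therefore the nullity is $\dim(\mathcal{N}(A)) = 0$, and the given equation holds.

Suppose $\operatorname{rank}(A) = r < n$.

Then there are $n-r$ free variables in the solution of $A \mathbf{x} = 0$.

We can then get $n-r$ vectors in $\mathcal{N}(A)$ by setting exactly one free variable to $1$ and the other free variables to $0$ (a one-hot choice), and solving for the pivot variables. These $n-r$ vectors are linearly independent, because each one has a $1$ in a free position where all the others have $0$. They also span the null space of $A$, because every solution is determined by the values of its free variables. Therefore, $\dim(\mathcal{N}(A)) = n-r$.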
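The one-hot construction in the proof can be sketched directly. This is a minimal sketch under stated assumptions: the function names `rref_with_pivots` and `null_space_basis` are hypothetical, exact fractions are used, and the $4 \times 5$ matrix is an illustrative example of rank $2$.

```python
from fractions import Fraction

def rref_with_pivots(M):
    """Return (R, pivots): the reduced row-echelon form of M and its pivot column indices."""
    R = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(R), len(R[0])
    pivots, pivot_row = [], 0
    for col in range(cols):
        if pivot_row == rows:
            break
        pr = next((r for r in range(pivot_row, rows) if R[r][col] != 0), None)
        if pr is None:
            continue
        R[pivot_row], R[pr] = R[pr], R[pivot_row]
        p = R[pivot_row][col]
        R[pivot_row] = [x / p for x in R[pivot_row]]
        for r in range(rows):
            if r != pivot_row and R[r][col] != 0:
                f = R[r][col]
                R[r] = [a - f * b for a, b in zip(R[r], R[pivot_row])]
        pivots.append(col)
        pivot_row += 1
    return R, pivots

def null_space_basis(A):
    """One basis vector per free variable: that variable is 1, the other free variables are 0."""
    R, pivots = rref_with_pivots(A)
    n = len(A[0])
    free = [j for j in range(n) if j not in pivots]
    basis = []
    for f in free:
        v = [Fraction(0)] * n
        v[f] = Fraction(1)                      # one-hot on this free variable
        for i, p in enumerate(pivots):
            v[p] = -R[i][f]                     # pivot variables are forced by row i of R
        basis.append(v)
    return basis

# Illustrative 4 x 5 matrix of rank 2 (an assumption, not from the text).
A = [[1, 2, 0, 1, 3],
     [2, 4, 1, 0, 1],
     [3, 6, 1, 1, 4],
     [0, 0, 1, -2, -5]]

basis = null_space_basis(A)
residuals = [[sum(a * x for a, x in zip(row, v)) for row in A] for v in basis]
print(len(basis), basis, residuals)
```

Here $n = 5$ and $r = 2$, so the construction produces $n - r = 3$ vectors, and each of them is mapped to the zero vector by $A$.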