Title: Differential Geometry And Stochastic Dynamics With Deep Learning Numerics

Abstract: In this paper, we demonstrate how deterministic and stochastic dynamics on manifolds, as well as differential geometric constructions, can be implemented concisely and efficiently using modern computational frameworks that mix symbolic expressions with efficient numerical computations. In particular, we use the symbolic expression and automatic differentiation features of the Python library Theano, originally developed for high-performance computations in deep learning. We show how various aspects of differential geometry and Lie group theory, connections, metrics, curvature, left/right invariance, geodesics and parallel transport can be formulated with Theano using the automatic computation of derivatives of any order. We will also show how symbolic stochastic integrators and concepts from non-linear statistics can be formulated and optimized with only a few lines of code. We will then give explicit examples on low-dimensional classical manifolds for visualization and demonstrate how this approach allows both a concise implementation and efficient scaling to high dimensional problems.
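
To give a feel for the kind of computation the abstract describes, here is a minimal sketch that derives Christoffel symbols from a metric and integrates the geodesic equation on the unit 2-sphere. It is not the paper's code: it uses NumPy, and central finite differences stand in for Theano's automatic differentiation. The function names (`metric`, `christoffel`, `integrate_geodesic`) and the step sizes are assumptions made for illustration.

```python
import numpy as np

def metric(x):
    """Round metric on the unit 2-sphere in coordinates x = (theta, phi)."""
    theta = x[0]
    return np.array([[1.0, 0.0], [0.0, np.sin(theta) ** 2]])

def christoffel(x, h=1e-5):
    """Gamma[k, i, j], with metric derivatives taken by central differences
    (an assumption standing in for automatic differentiation)."""
    n = len(x)
    dg = np.zeros((n, n, n))  # dg[l, i, j] = d g_ij / d x^l
    for l in range(n):
        e = np.zeros(n)
        e[l] = h
        dg[l] = (metric(x + e) - metric(x - e)) / (2 * h)
    g_inv = np.linalg.inv(metric(x))
    # term[l, i, j] = d_i g_lj + d_j g_li - d_l g_ij
    term = np.einsum("ilj->lij", dg) + np.einsum("jli->lij", dg) - dg
    return 0.5 * np.einsum("kl,lij->kij", g_inv, term)

def geodesic_rhs(state):
    """First-order form of the geodesic equation: (x, v) -> (v, -Gamma(v, v))."""
    x, v = state[:2], state[2:]
    acc = -np.einsum("kij,i,j->k", christoffel(x), v, v)
    return np.concatenate([v, acc])

def integrate_geodesic(x0, v0, t_end=1.0, steps=200):
    """Classical fourth-order Runge-Kutta integration of the geodesic equation."""
    state = np.concatenate([x0, v0]).astype(float)
    dt = t_end / steps
    for _ in range(steps):
        k1 = geodesic_rhs(state)
        k2 = geodesic_rhs(state + 0.5 * dt * k1)
        k3 = geodesic_rhs(state + 0.5 * dt * k2)
        k4 = geodesic_rhs(state + dt * k3)
        state = state + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return state[:2], state[2:]

# Start at latitude 45 degrees heading due east; the path is a great circle.
x0 = np.array([np.pi / 4, 0.0])
v0 = np.array([0.0, 1.0])
x1, v1 = integrate_geodesic(x0, v0)
print("squared speed at start:", v0 @ metric(x0) @ v0)
print("squared speed at end:  ", v1 @ metric(x1) @ v1)
```

Geodesics have constant speed, so comparing the two printed values is a quick sanity check on both the Christoffel symbols and the integrator. Swapping in a different `metric` function is the only change needed to try another two-dimensional manifold.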

Applications Of Differential Geometry In Artificial Intelligence

I am new to this wonderful site. I searched around a bit but I couldn’t find any well-discussed posts on applications of differential geometry to artificial intelligence, or more generally to computer science. I came across Riemannian Geometry a few months back via YouTube and have been hooked on it since. I even bought a textbook and started learning from there. I am an engineer and I have sufficient math skills to make sense of the book, but it hardly has any real life applications. Almost everything is “Prove this” or “Theorem that”. So I wanted to know if there are any real-life implementable applications for CS. More specifically, in the field of AI and Machine Learning.

From what I have understood, differential geometry allows us to “see”, “understand” and “analyze” curves in higher dimensional spaces. Is this accurate? And can this help in AI and Machine Learning? In subtopics like Natural Language Processing, Robotics, Computer Vision, Data Analysis? I would sure like to start off with a simple project which helps me understand differential geometry better.

Thanks

For applications of Differential Geometry in Computer Science, the following link is very useful:

It talks about “Differential Geometric Methods for Shape Analysis and Activity Recognition”.

Hope it helps!

There are certainly several areas in which differential geometry affects AI, particularly within machine learning and computer vision.

Intrinsic Geometry And Non-Euclidean Geometry

The field of differential geometry became an area of study in its own right, distinct from the broader idea of analytic geometry, in the 1800s. This happened primarily through the foundational work of Carl Friedrich Gauss and Bernhard Riemann, together with the important contributions of Nikolai Lobachevsky on hyperbolic and non-Euclidean geometry and the development of projective geometry during the same period.

In 1827 Gauss produced the Disquisitiones generales circa superficies curvas, detailing the general theory of curved surfaces; it has been dubbed the single most important work in the history of differential geometry. On the strength of this work and his subsequent papers and unpublished notes on the theory of surfaces, Gauss has been dubbed the inventor of non-Euclidean geometry and of intrinsic differential geometry. In his fundamental paper Gauss introduced the Gauss map, Gaussian curvature, and the first and second fundamental forms, proved the Theorema Egregium showing the intrinsic nature of the Gaussian curvature, and studied geodesics, computing the area of a geodesic triangle in various non-Euclidean geometries on surfaces.

Differential Geometry For Machine Learning

In machine learning, we’re often interested in learning a function or a distribution that we can use to make predictions. In order to learn and apply such a function or distribution, we need to somehow represent it, i.e. put it into a form where we can do computations on it. In parametric modeling, we typically do this by choosing a finite set of parameters which fully characterize the predictor. Much of the time, the representation is merely a computational tool, and what we ultimately care about is the function or distribution itself.

As we’ll see below, the performance of many learning algorithms is very sensitive to the choice of representation. A lot of work has been devoted to coming up with clever reparameterizations which boost the performance of an algorithm. But this seems odd: if you’re working with the same class of predictors, why should the algorithm do something different just because you’re describing those predictors differently?

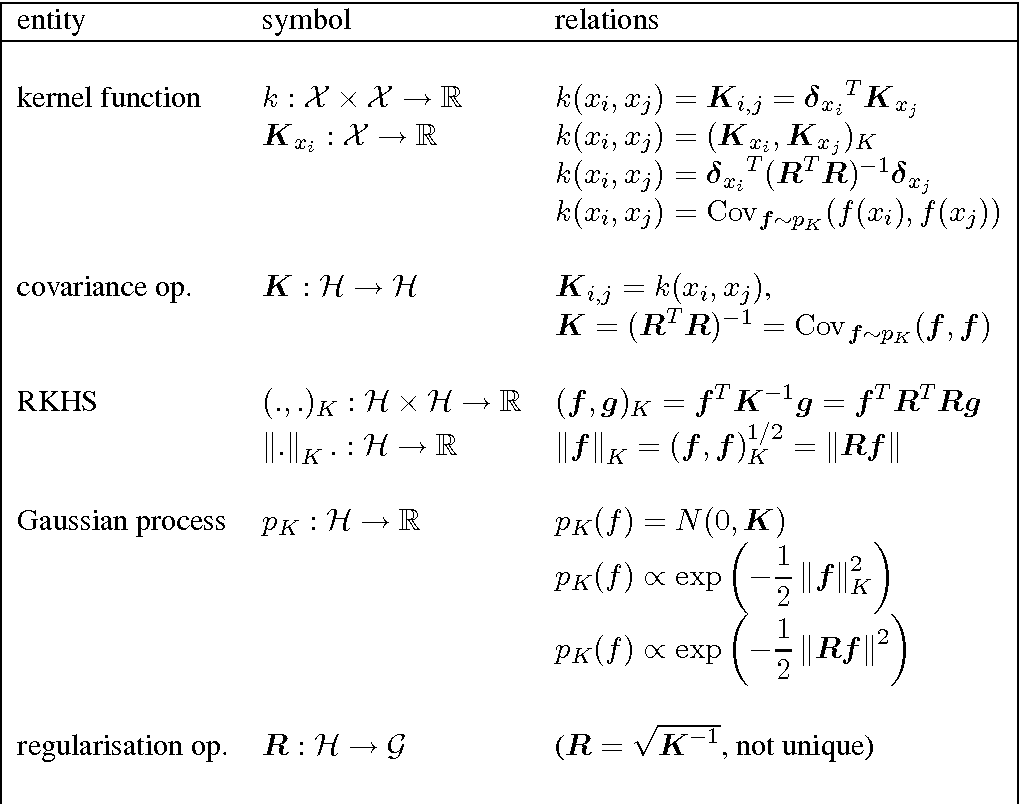

In fact, lots of algorithms have been carefully designed to be independent of the representation. Many of the biggest advances in the last 30 years of machine learning research can be seen as languages for talking directly about the predictors themselves without reference to an underlying parameterization. Examples include kernels, Gaussian processes, Bayesian nonparametrics, and VC dimension.

Manifold Learning: The Theory Behind It

Manifold learning has become an exciting application of geometry, and in particular differential geometry, to machine learning. However, I feel that a lot of the theory behind the algorithms is left out, and understanding it helps in applying the algorithms more effectively.

We will begin this rather long exposition with what manifold learning is and what its applications to machine learning are. Manifold learning is merely using the geometric properties of the data in high dimensions to implement the following things:

Where the notion of closeness is further refined using the distribution of the data. There are a few frameworks this is achieved with:
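
One way to make a data-dependent notion of closeness concrete is to measure distance along a nearest-neighbor graph built on the samples rather than straight through the ambient space. The sketch below is only an illustration of that idea, in the spirit of Isomap-style methods; the function name `graph_geodesic_distances`, the choice `k=2`, and the half-circle dataset are all assumptions, not something taken from the text above.

```python
import numpy as np

def graph_geodesic_distances(points, k=2):
    """Return (Euclidean distances, shortest-path distances on a k-NN graph)."""
    n = len(points)
    diff = points[:, None, :] - points[None, :, :]
    euclid = np.sqrt((diff ** 2).sum(axis=-1))

    # Start with no edges, then connect every point to its k nearest neighbors.
    dist = np.full((n, n), np.inf)
    np.fill_diagonal(dist, 0.0)
    for i in range(n):
        neighbors = np.argsort(euclid[i])[1:k + 1]  # index 0 is the point itself
        dist[i, neighbors] = euclid[i, neighbors]
        dist[neighbors, i] = euclid[i, neighbors]   # keep the graph symmetric

    # Floyd-Warshall: allow paths through each intermediate node m in turn.
    for m in range(n):
        dist = np.minimum(dist, dist[:, m:m + 1] + dist[m:m + 1, :])
    return euclid, dist

# Samples along a half circle of radius 1 embedded in the plane.
angles = np.linspace(0.0, np.pi, 50)
points = np.stack([np.cos(angles), np.sin(angles)], axis=1)
euclid, geodesic = graph_geodesic_distances(points, k=2)

print("straight-line distance between endpoints:", euclid[0, -1])
print("graph distance between endpoints:        ", geodesic[0, -1])
```

The two endpoints are close in the plane but far apart along the curve the data actually lies on; the graph distance approximates the arc length, which is the quantity a manifold-aware method would use. The all-pairs loop is cubic in the number of points, so this form is only suitable for small examples.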

Differential Geometry In Reinforcement Learning

If you consider the link between reinforcement learning and control theory, then you can look at the “geometric control” literature, which is essentially what you’re asking for. Of course, a lot of the RL canon focuses on state and action spaces without any metric or topological structure. In any case, standard control theory relies on Euclidean metrics for state and action spaces and geometric control is the “correct” generalization of this to more general spaces. I don’t know how much of geometric control is used in industrial engineering applications vs just being academic. But as far as I know, this is the richest body of work on using differential geometry in control theory.

Bengio talks a lot about real-world data “living on a manifold” in the context of describing how DL works, and the whole concept behind denoising autoencoders borrows from differential geometry. Our work takes this one step further by saying that the data not only lives on a manifold, but that it moves on or near the manifold, which enforces much greater constraints in the spatiotemporal evolution of sequential real-world data. As @RocketshipRocketship mentions, this idea is at the core of geometric control theory, but much of that theory neglects both the potential of a learning controller and the powerful additional information density of signalling between nonlinear dynamical systems.

About This Research Topic

Traditional machine learning, pattern recognition and data analysis methods often assume that input data can be represented well by elements of Euclidean space. While this assumption has worked well for many past applications, researchers have increasingly realized that most data in vision and pattern …

Keywords: Differential geometry, topological data analysis, deep learning, time-series modeling

Geomstats: A Python Package For Riemannian Geometry In Machine Learning

Nina Miolane, Nicolas Guigui, Alice Le Brigant, Johan Mathe, Benjamin Hou, Yann Thanwerdas, Stefan Heyder, Olivier Peltre, Niklas Koep, Hadi Zaatiti, Hatem Hajri, Yann Cabanes, Thomas Gerald, Paul Chauchat, Christian Shewmake, Daniel Brooks, Bernhard Kainz, Claire Donnat, Susan Holmes, Xavier Pennec; 21:19, 2020.

Geometric Data Processing Group

Geometry is a central component of algorithms for computer-aided design, medical imaging, 3D animation, and robotics. While early work in computational geometry provided basic methods to store and process shapes, modern geometry research builds on these foundations by assembling unstructured, noisy, and even probabilistic signals about shape into robust models capturing semantic, geometric, and topological features. Geometric techniques in two and three dimensions also can prove valuable in high dimensions: distances and flows make sense when analyzing abstract data, from corpora of text to clicks on a website, suggesting that geometric inference applies far beyond shapes gathered from robotic sensors or virtual reality environments. Research in this domain demands theoretical grounding from modern differential geometry and topology while coping with the realities of uncertainty and incompleteness that appear in computation and statistics.

Merging these appearances of geometry in computation suggests the broad application of geometric data processing, in two senses:

Our team’s research aims to widen the scope of “geometric data processing” to encapsulate the theory, algorithms, and applications for shape processing applied to abstract datasets and 3D surfaces alike.

Classical Antiquity Until The Renaissance

The study of differential geometry, or at least the study of the geometry of smooth shapes, can be traced back at least to classical antiquity. In particular, much was known about the geometry of the Earth, a spherical geometry, in the time of the ancient Greek mathematicians. Famously, Eratosthenes calculated the circumference of the Earth around 200 BC, and around 150 AD Ptolemy in his Geography introduced the stereographic projection for the purposes of mapping the shape of the Earth. Implicitly throughout this time principles that form the foundation of differential geometry and calculus were used in geodesy, although in a much simplified form. Namely, as far back as Euclid’s Elements it was understood that a straight line could be defined by its property of providing the shortest distance between two points, and applying this same principle to the surface of the Earth leads to the conclusion that great circles, which are only locally similar to straight lines in a flat plane, provide the shortest path between two points on the Earth’s surface. Indeed the measurements of distance along such geodesic paths by Eratosthenes and others can be considered a rudimentary measure of arclength of curves, a concept which did not see a rigorous definition in terms of calculus until the 1600s.

Learning From Geometric Data

Our team has proposed sensible units for learning from geometric data based in theory, just as convolution was a part of image processing before appearing in neural networks. To this end, we have introduced architectures for several species of data, including point clouds, parametric shapes, and meshes. Our algorithms for learning from geometry are widely adopted, with unexpected applications; for example, our dynamic graph CNN model yields top results for inference problems in high energy physics.

Applications Of Algebraic Geometry To Machine Learning

I am interested in applications of algebraic geometry to machine learning. I have found some papers and books, mainly by Bernd Sturmfels, on algebraic statistics and machine learning. However, all this seems to be applicable only to rather low dimensional toy problems. Is this impression correct? Is there something like computational algebraic machine learning that has practical value for real world problems, maybe even very high dimensional problems, like computer vision?

I’m not an expert, but I guess that low-rank matrix completion may provide an example.

One useful remark is that dimension reduction is a critical problem in data science for which there are a variety of useful approaches. It is important because a great many good machine learning algorithms have complexity which depends on the number of parameters used to describe the data, so reducing the dimension can turn an impractical algorithm into a practical one.
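
As a concrete instance of dimension reduction, here is a minimal sketch of principal component analysis via the singular value decomposition. PCA is just one of the many approaches alluded to above, chosen here as an assumption; the function name `pca_reduce` and the synthetic dataset (50 observed features generated from 2 latent ones plus small noise) are hypothetical.

```python
import numpy as np

def pca_reduce(data, n_components):
    """Project rows of `data` onto the top principal components.

    Returns the reduced data and the fraction of variance it retains.
    """
    centered = data - data.mean(axis=0)
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]          # principal directions as rows
    variances = singular_values ** 2
    explained = variances[:n_components].sum() / variances.sum()
    return centered @ components.T, explained

# Synthetic data: 200 samples that look 50-dimensional but are
# essentially 2-dimensional up to a small amount of noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 50))
data = latent @ mixing + 0.01 * rng.normal(size=(200, 50))

reduced, explained = pca_reduce(data, n_components=2)
print("reduced shape:", reduced.shape)
print("fraction of variance retained:", explained)
```

A downstream algorithm whose cost grows with the number of features can then be run on `reduced` instead of `data`. PCA only finds linear structure, which is one reason more geometric techniques are of interest here.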

This has two implications for your question. First, if you invent a cool new algorithm then don’t worry too much about the dimension of the data at the outset – practitioners already have a bag of tricks for dealing with it. Second, it seems to me that dimension reduction is itself an area where more sophisticated geometric techniques could be brought to bear – many of the existing algorithms already have a geometric flavor.

Motivation: Why Representation Independence

Why do we care if an algorithm’s behavior depends on the representation of the predictor? In order to motivate this, I’ll discuss a simple example, one which has nothing to do with differential geometry: polynomial regression. Suppose we wish to fit a cubic polynomial to predict a scalar target y as a function of a scalar input x. We can do this with linear regression, using the basis function expansion $\phi(x) = (1, x, x^2, x^3)$. For a small dataset, we might get results such as the following:

The two figures show the same data, except that the one on the right has been shifted so that the x-axis goes from 8 to 12 rather than from -2 to 2. Observe that the fitted polynomial is the same, up to the shift. This happens because polynomial regression chooses the polynomial which minimizes the squared error, and polynomials can be shifted, i.e. replace p(x) by p(x - 10), where p denotes the polynomial. Notice that the one on the right has very large coefficients, because this shifting operation tends to blow up the coefficients when it is explicitly expanded out. This has no impact on the algorithm, at least in exact arithmetic.
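
The invariance can be checked numerically. The following is a rough sketch on synthetic data (a noisy sine curve, chosen here as an assumption since the original dataset is not given): it fits a cubic by ordinary least squares to inputs in [-2, 2] and again to the same targets with inputs shifted into [8, 12]. The helper names `cubic_features` and `fit_least_squares` are made up for this illustration.

```python
import numpy as np

def cubic_features(x):
    """Basis expansion phi(x) = (1, x, x^2, x^3), one row per input."""
    return np.stack([np.ones_like(x), x, x ** 2, x ** 3], axis=1)

def fit_least_squares(x, y):
    """Ordinary least squares fit of a cubic polynomial."""
    coeffs, *_ = np.linalg.lstsq(cubic_features(x), y, rcond=None)
    return coeffs

rng = np.random.default_rng(0)
x = np.linspace(-2.0, 2.0, 20)
y = np.sin(x) + 0.1 * rng.normal(size=x.shape)

coeffs = fit_least_squares(x, y)                  # inputs in [-2, 2]
coeffs_shifted = fit_least_squares(x + 10.0, y)   # inputs in [8, 12]

# Predictions at corresponding points agree even though the coefficients do not.
pred = cubic_features(x) @ coeffs
pred_shifted = cubic_features(x + 10.0) @ coeffs_shifted

print("max prediction difference:", np.max(np.abs(pred - pred_shifted)))
print("largest |coefficient|, original:", np.max(np.abs(coeffs)))
print("largest |coefficient|, shifted: ", np.max(np.abs(coeffs_shifted)))
```

In floating point the two sets of predictions match only up to rounding error, which is the practical meaning of "at least in exact arithmetic": the shifted design matrix is much worse conditioned, even though the fitted function is the same.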

Linear regression is invariant to parameterization, but the story changes once we try to regularize the model. The most common regularized version of linear regression is ridge regression, where we penalize the squared Euclidean norm of the coefficients. Now observe what happens when we try to fit the same data as above:
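
A small experiment along these lines, again on assumed synthetic data (a noisy sine curve) and with an arbitrarily chosen penalty strength `lam=1.0`, shows how the ridge penalty breaks the invariance. The helpers `cubic_features` and `fit_ridge` are hypothetical names, and the closed-form normal-equation solve is used only because the problem is tiny.

```python
import numpy as np

def cubic_features(x):
    """Basis expansion phi(x) = (1, x, x^2, x^3), one row per input."""
    return np.stack([np.ones_like(x), x, x ** 2, x ** 3], axis=1)

def fit_ridge(x, y, lam=1.0):
    """Ridge regression: minimize squared error + lam * ||coeffs||^2."""
    phi = cubic_features(x)
    gram = phi.T @ phi + lam * np.eye(phi.shape[1])
    return np.linalg.solve(gram, phi.T @ y)

rng = np.random.default_rng(0)
x = np.linspace(-2.0, 2.0, 20)
y = np.sin(x) + 0.1 * rng.normal(size=x.shape)

coeffs = fit_ridge(x, y)                  # inputs in [-2, 2]
coeffs_shifted = fit_ridge(x + 10.0, y)   # same targets, inputs in [8, 12]

pred = cubic_features(x) @ coeffs
pred_shifted = cubic_features(x + 10.0) @ coeffs_shifted

# Unlike plain least squares, the two fits now disagree at corresponding points.
print("max prediction difference:", np.max(np.abs(pred - pred_shifted)))
```

The penalty is measured on the coefficients, and the shifted problem needs large coefficients to express the same curve, so the regularizer pushes the shifted fit toward a different function. The size of the gap depends on `lam` and on the data.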

Differential Geometry In Computer Vision And Machine Learning

We are organizing the 5th International Workshop on Differential Geometry in Computer Vision and Machine Learning in conjunction with CVPR 2020, to be held in Seattle, Washington in June 2020. We invite contributions of full-length papers from you and your colleagues on the broad topic of topological methods and differential geometric approaches for computer vision, machine learning, and medical imaging.

We are planning a full-day workshop comprising both oral and poster presentations. More information, along with the call for papers, is available online.

The workshop will be held on one of the days from June 16th to June 18th. Papers should be 8 pages in length excluding references, and the submission deadline is March 8th, 2020. We will send out further emails when CVPR informs us of the final date of the workshop.

The workshop will feature keynote lectures by the following distinguished speakers:

Dr. Tamal Dey, Ohio State University, USA
Dr. Guido Montufar, University of California Los Angeles, USA
Dr. Elizabeth Munch, Michigan State University, USA
Dr. Lek-Heng Lim, University of Chicago, USA
Dr. Pavan Turaga, Arizona State University, USA

We aim to foster interactions between engineers, mathematicians, statisticians, and computer scientists as well as domain experts in the fields of computer vision, biology, and medicine.

Please email me if you have any questions. Thank you,

Roadmap To Differential Geometry For Machine Learning

Recently within machine learning, there has been a lot of work on non-convex optimization, natural gradient methods, and similar topics that is based on differential geometry, which gives rise to an increased need to learn differential geometry in the machine learning community.

After searching a bit, I found that a possible route might be:

John Lee’s book series in the order of

Introduction to Topological Manifolds -> Introduction to Smooth Manifolds -> Riemannian Manifolds: An Introduction to Curvature

Is this a “reasonable” path for getting the main ideas and possibly applying new results from the differential geometry community? And if we follow it, is it still necessary to read do Carmo’s two texts, which many friends from the math department mention a lot? For practical reasons, since machine learning research itself already takes up the majority of our time, there is a preference not to read additional books unless it is really necessary.
